Azure & AWS Cloud Certification Roadmap

I recently organised a virtual session on the Azure and AWS cloud certification roadmap.

If you missed the session, refer to the video below for the complete session:

Azure App Service Backup Failure Alert

The Backup feature in Azure App Service lets you easily create app backups manually or on a schedule. Refer to this blog to learn more about how to configure backups for an Azure Web App:

https://www.iamashishsharma.com/2021/01/configure-backup-for-your-azure-app.html

In this article, we will discuss how to create an alert that is triggered whenever a web app backup fails.

Go to Alerts and create a new alert rule.

Select the Azure web app for which you want to configure the backup failure alert.

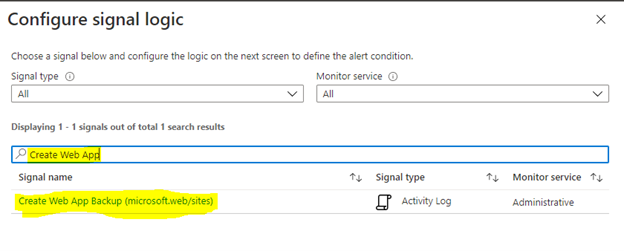

Click Condition and search for “Create Web App Backup”.

Then change the Status to “Failed” and click Done.

This creates a condition that fires whenever a backup of the Azure Web App fails.

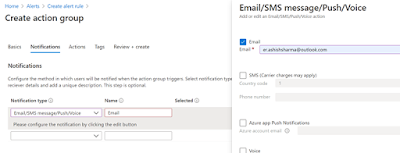

Click Action Groups and select an existing action group, or click Create action group to make a new one.

Select your subscription and resource group, and enter a name for the action group.

Select your notification method. I added Email as the notification type, as shown below. You can also add other actions, such as a webhook, from the Actions tab.

Then click Review + create.

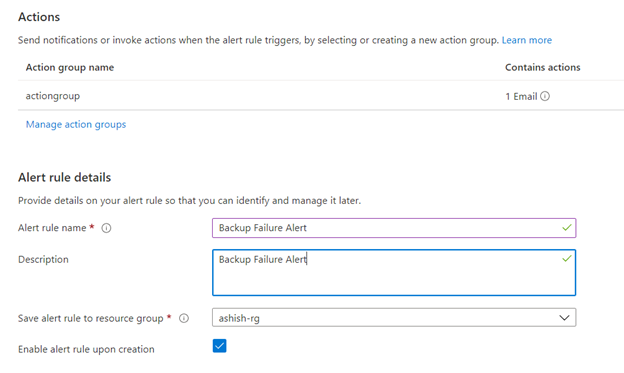

Enter the alert rule details, such as the alert name and description, and save the alert to your resource group.

Click Create alert rule.

This creates an alert rule that is triggered whenever an Azure web app backup fails.
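The condition above matches log entries for the “Create Web App Backup” operation that end with status Failed (assuming, as the Status filter suggests, that this is an Activity Log signal). The Python sketch below models only that matching rule; the `ActivityEvent` record and the app names are hypothetical simplifications, not the Azure Monitor schema or API.

```python
from dataclasses import dataclass


@dataclass
class ActivityEvent:
    # Hypothetical, simplified log record; not the Azure Monitor schema.
    resource: str
    operation: str
    status: str


def should_alert(event: ActivityEvent, app_name: str) -> bool:
    """Mirror the alert condition: the selected app, the
    'Create Web App Backup' operation, and status 'Failed'."""
    return (
        event.resource == app_name
        and event.operation == "Create Web App Backup"
        and event.status == "Failed"
    )


events = [
    ActivityEvent("my-web-app", "Create Web App Backup", "Succeeded"),
    ActivityEvent("my-web-app", "Create Web App Backup", "Failed"),
    ActivityEvent("other-app", "Create Web App Backup", "Failed"),
]

# Only the failed backup of the selected app should fire the alert.
fired = [e for e in events if should_alert(e, "my-web-app")]
```

Scoping the rule to a single resource is why the failure in `other-app` is ignored; create one rule per app, or select several resources, if you need wider coverage.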

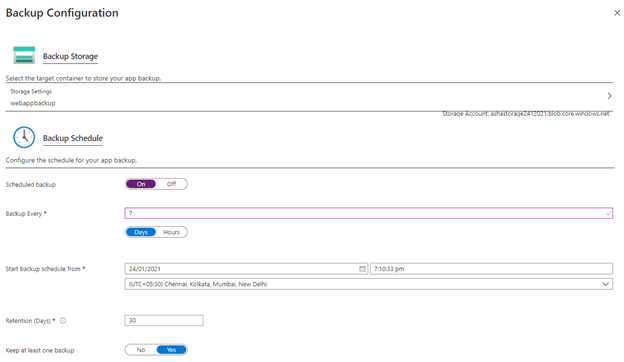

Configure a Backup for your Azure App Service

The Backup feature in Azure App Service allows us to easily create app backups manually or on a schedule. You can restore the app to a snapshot of a previous state by overwriting the existing app or restoring to another app.

Follow the steps below to schedule your backup:

1. Go to your App Service and click Backups in the left navigation bar.

2. Click Configure and select the Azure storage account and container in which to store your backups. Then configure the backup schedule as illustrated below.

3. Once everything is configured, you can see the backup status as shown below.

4. Once the backup succeeds, you can see the details of the next scheduled backup.
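A backup schedule boils down to a start time, a frequency interval and a frequency unit. As a rough sketch of the arithmetic behind the next scheduled backup shown in the portal, the Python function below lists upcoming run times, assuming runs fall exactly on start + k * interval. It does not call any Azure API, and the dates are sample values.

```python
from datetime import datetime, timedelta


def next_backups(start: datetime, interval: int, unit: str,
                 now: datetime, count: int = 3) -> list[datetime]:
    """Return the next `count` scheduled run times at or after `now`.

    Simplified model: runs happen exactly at start + k * interval.
    `unit` is "Day" or "Hour", like the frequency unit in the portal.
    """
    if unit == "Day":
        step = timedelta(days=interval)
    elif unit == "Hour":
        step = timedelta(hours=interval)
    else:
        raise ValueError(f"unsupported unit: {unit}")
    if now <= start:
        first = start
    else:
        # Ceiling division: number of steps until the next run at or after `now`.
        k = -(-(now - start) // step)
        first = start + k * step
    return [first + i * step for i in range(count)]


# Daily backups starting 10 Jan 2021 at 02:00, checked on 12 Jan at 09:30.
upcoming = next_backups(datetime(2021, 1, 10, 2, 0), 1, "Day",
                        now=datetime(2021, 1, 12, 9, 30))
```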

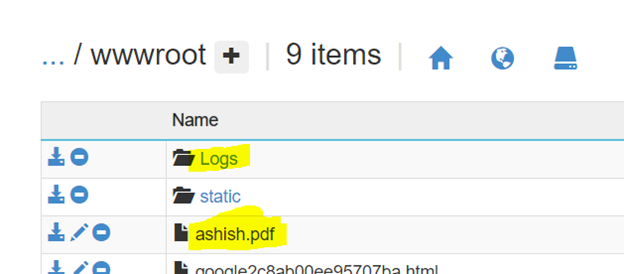

Exclude files from your backup

If you want to exclude some folders and files from your backup, create a _backup.filter file inside the D:\home\site\wwwroot folder of your web app.

Let’s assume you want to exclude the Logs folder and the ashish.pdf file.

Create the _backup.filter file and list the file and folder paths in it as shown below.

From then on, any files and folders listed in _backup.filter are excluded from future backups, whether scheduled or manually initiated.
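Each line in _backup.filter is a path relative to D:\home, and a folder entry excludes everything beneath it. For this example, assuming both items sit directly under wwwroot, the file would contain `\site\wwwroot\Logs` and `\site\wwwroot\ashish.pdf`. The Python sketch below models that matching rule; the case-insensitive comparison is an assumption based on Windows path semantics, not documented backup behaviour.

```python
from pathlib import PureWindowsPath

# Sample _backup.filter content; assumes Logs and ashish.pdf sit directly in wwwroot.
FILTER_TEXT = r"""
\site\wwwroot\Logs
\site\wwwroot\ashish.pdf
"""


def load_filter(text: str) -> list[PureWindowsPath]:
    """One path per non-empty line, relative to D:\\home."""
    return [PureWindowsPath(line.strip()) for line in text.splitlines() if line.strip()]


def is_excluded(path: str, entries: list[PureWindowsPath]) -> bool:
    """True if `path` is a listed entry or lies inside a listed folder.

    PureWindowsPath compares case-insensitively, like the Windows file system.
    """
    p = PureWindowsPath(path)
    return any(p == e or e in p.parents for e in entries)


entries = load_filter(FILTER_TEXT)
```

Matching on whole path components means a sibling such as `LogsArchive` is still backed up.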

Configure Azure web app backup using PowerShell:

$webappname="mywebapp$(Get-Random -Minimum 100000 -Maximum 999999)"
$storagename="$($webappname)storage"
$container="appbackup"
$location="West Europe"

# Create a resource group.
New-AzResourceGroup -Name myResourceGroup -Location $location

# Create a storage account.
$storage = New-AzStorageAccount -ResourceGroupName myResourceGroup `
  -Name $storagename -SkuName Standard_LRS -Location $location

# Create a storage container.
New-AzStorageContainer -Name $container -Context $storage.Context

# Generate a SAS token for the storage container, valid for 1 year.
# NOTE: You can use the same SAS token to make backups in Web Apps until -ExpiryTime.
$sasUrl = New-AzStorageContainerSASToken -Name $container -Permission rwdl `
  -Context $storage.Context -ExpiryTime (Get-Date).AddYears(1) -FullUri

# Create an App Service plan in Standard tier. Standard tier allows one backup per day.
New-AzAppServicePlan -ResourceGroupName myResourceGroup -Name $webappname `
  -Location $location -Tier Standard

# Create a web app.
New-AzWebApp -ResourceGroupName myResourceGroup -Name $webappname `
  -Location $location -AppServicePlan $webappname

# Schedule a backup every day, beginning in one hour, and retain for 10 days.
Edit-AzWebAppBackupConfiguration -ResourceGroupName myResourceGroup -Name $webappname `
  -StorageAccountUrl $sasUrl -FrequencyInterval 1 -FrequencyUnit Day -KeepAtLeastOneBackup `
  -StartTime (Get-Date).AddHours(1) -RetentionPeriodInDays 10

# List statuses of all backups that are complete or currently executing.
Get-AzWebAppBackupList -ResourceGroupName myResourceGroup -Name $webappname
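The -RetentionPeriodInDays 10 and -KeepAtLeastOneBackup options in the script above work together: old backups are cleaned up, but the storage account is not left empty. The Python sketch below is a simplified model of that rule, assuming backups older than the retention period are deleted and the newest one is spared only when all of them have expired. It illustrates the idea and is not the service's actual cleanup algorithm.

```python
from datetime import datetime, timedelta


def backups_to_delete(backup_times: list[datetime], now: datetime,
                      retention_days: int,
                      keep_at_least_one: bool = True) -> list[datetime]:
    """Return the backups that fall outside the retention window.

    Simplified model of -RetentionPeriodInDays and -KeepAtLeastOneBackup:
    anything older than `retention_days` is deleted, except that when every
    backup has expired the newest one is kept if `keep_at_least_one` is set.
    """
    cutoff = now - timedelta(days=retention_days)
    expired = sorted(t for t in backup_times if t < cutoff)
    if keep_at_least_one and expired and len(expired) == len(backup_times):
        expired = expired[:-1]  # spare the most recent backup
    return expired


now = datetime(2021, 1, 31)
daily = [datetime(2021, 1, d) for d in (1, 15, 25, 30)]
```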

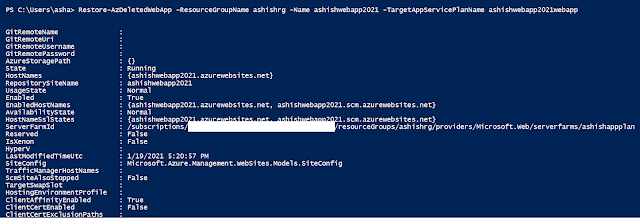

Restore a deleted Azure Web App

If you have accidentally deleted your Azure Web App and want to restore it, follow the steps below.

1. Run the following PowerShell command to get the details of the deleted web app.

Get-AzDeletedWebApp -Name <>

2. Restore the web app.

Restore-AzDeletedWebApp -ResourceGroupName <> -Name <> -TargetAppServicePlanName <>

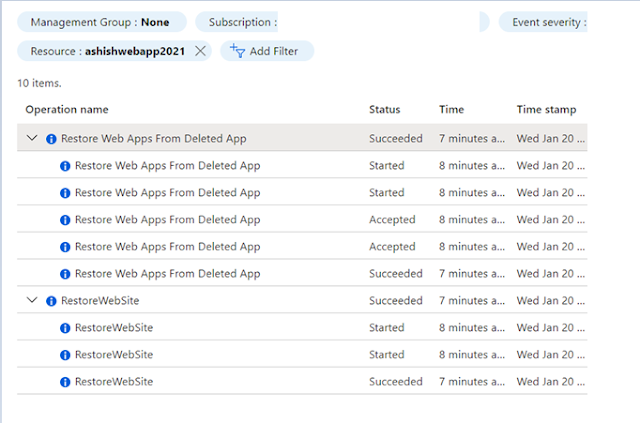

You can also check the Activity log of the restored web app, as illustrated below.

Note:

1. Deleted apps are purged from the system 30 days after the initial deletion. After an app is purged, it can’t be recovered.

2. Undelete functionality isn’t supported for the Consumption plan.

3. App Service apps running in an App Service Environment don’t support snapshots. Therefore, undelete and clone functionality aren’t supported for App Service apps running in an App Service Environment.
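The three notes above reduce to a small decision rule. The Python sketch below encodes it as a pre-flight check before running Restore-AzDeletedWebApp; the `plan` label is a hypothetical free-form string rather than an Azure API value, and Get-AzDeletedWebApp remains the authoritative way to see what can still be restored.

```python
from datetime import datetime, timedelta

PURGE_AFTER = timedelta(days=30)  # deleted apps are purged 30 days after deletion


def can_undelete(deleted_at: datetime, now: datetime,
                 plan: str, in_ase: bool = False) -> bool:
    """Apply the three notes above to decide whether a deleted app can be restored.

    `plan` is a free-form label such as "Consumption" or "S1"
    (a hypothetical input format, not an Azure API value).
    """
    if plan == "Consumption" or in_ase:
        return False  # no undelete on Consumption or inside an App Service Environment
    return now - deleted_at < PURGE_AFTER
```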

References: https://docs.microsoft.com/en-us/azure/app-service/app-service-undelete

Top 50 Microsoft Azure Blogs, Websites & Influencers in 2020

I am really glad to share that my blog is listed among the Top 50 Microsoft Azure Blogs, Websites & Influencers in 2020. I am honored to be on this list alongside other top contributors from the Microsoft Azure community.

Azure Heroes: Content Hero & Community Hero Badges

Today I was awarded two Azure Heroes badges: the Content Hero badge and the Community Hero badge.

If you are not aware of the Azure Heroes program, let me explain it. Azure Heroes is a new recognition program from Microsoft that rewards members of the technical community with digital badges for meaningful acts of impact. It is blockchain-based: Microsoft collaborated with Enjin, whose blockchain technology is used for issuing and transferring the badges. This means that, as the recipient of a tokenised badge, you take ownership of a digital collectible in the form of a non-fungible token (NFT).

Content Hero badges are given to those who share valuable knowledge at conferences, meetups or other events. The recipients of this rare award have created original content, sample code or learning resources, and documented and shared their experiences and lessons to help others build on Azure.

Community Hero badges are given for contributing materially by organising meetups or conferences, or by sharing content and being an active member of the community.

Check other badge categories: Azure Heroes

Find All Azure Heroes: https://www.azureheroes.community/map

My Profile: https://www.azureheroes.community/user/11387

Security Recommendations for Azure App Services

In this article, we will cover the security recommendations you should follow to establish a secure baseline configuration for Azure App Service in your Azure subscription.

1. Ensure that the App Service stack is set to the latest version

Newer versions may contain security enhancements and additional functionality. Using the latest software version is recommended to take advantage of enhancements and new capabilities. With each software installation, organizations need to determine if a given update meets their requirements and verify the compatibility and support provided for any additional software against the update revision that is selected.

Steps:

1. Open your App Service and click on Configuration under Settings section.

2. Go to General Settings and ensure that your stack is set to the latest version. In the example below, our stack is PHP, so we select the latest PHP version, i.e. PHP 7.4.

Similarly, if you are using another stack, such as .NET, Python or Java, make sure it is set to the latest version. Newer versions are released periodically, either to fix security flaws or to add functionality, and running the latest version lets your web app benefit from both.
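When checking that a stack is on the latest version, compare versions numerically rather than as text: as strings, “7.10” sorts before “7.4”. A minimal Python sketch of a numeric comparison follows; the version list is illustrative and is not the list App Service actually offers.

```python
def parse_version(v: str) -> tuple[int, ...]:
    """Turn '7.4' or '3.8.1' into a tuple of ints so versions compare numerically."""
    return tuple(int(part) for part in v.split("."))


def is_latest(configured: str, available: list[str]) -> bool:
    """True if `configured` is the highest of the `available` versions."""
    return parse_version(configured) == max(parse_version(v) for v in available)


# Illustrative version list, not the versions App Service actually offers.
versions = ["7.2", "7.4", "7.10"]
```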

2. Use the latest HTTP version

Newer versions may contain security enhancements and additional functionality, so using the latest version is recommended. As with any software update, organizations need to determine whether it meets their requirements and verify the compatibility and support of any additional software against the selected revision. HTTP 2.0 brings performance improvements over older HTTP versions: it addresses the head-of-line blocking problem and adds header compression and request prioritization. HTTP 2.0 no longer supports HTTP 1.1’s chunked transfer encoding mechanism, as it provides its own, more efficient mechanisms for data streaming.

Steps:

1. Open your App Service and click on Configuration under Settings section.

2. Go to General Settings and ensure that the HTTP version is set to the latest version. In the example below, the latest HTTP version is 2.0.

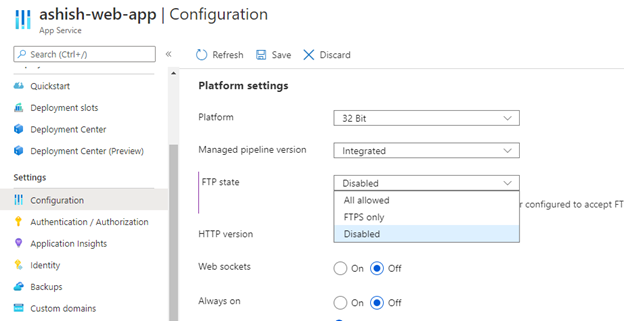

3. Disable FTP deployments

Azure FTP deployment endpoints are public. An attacker listening to traffic on a Wi-Fi network used by a remote employee, or on a corporate network, could see login traffic in cleartext, which would then give them full control of the app’s code base. This finding is more severe if deployment User Credentials are set at the subscription level rather than using the default Application Credentials, which are unique per app.

Steps:

1. Open your App Service and click on Configuration under Settings section.

2. Go to General Settings and ensure that FTP state is not set to All allowed; choose FTPS only or Disabled instead.

4. Enable Client Certificates mode

Client certificates allow the app to request a certificate for incoming requests, so only clients that have a valid certificate can reach the app. In enterprise environments, this TLS mutual authentication technique ensures the authenticity of clients to the server.

Steps:

1. Open your App Service and click on Configuration under Settings section.

2. Go to General Settings and ensure that Client certificate mode is set to Require.

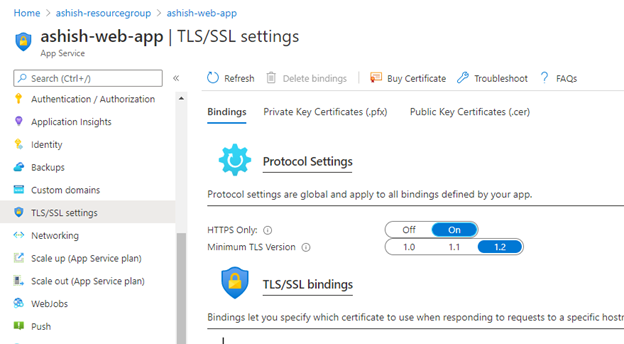

5. Redirect HTTP traffic to HTTPS

Enabling HTTPS-only traffic redirects all non-secure HTTP requests to HTTPS. HTTPS uses the SSL/TLS protocol to provide a secure connection that is both encrypted and authenticated, so it is important to support HTTPS for its security benefits.

Steps:

1. Open your App Service and click on TLS/SSL settings under Settings section.

2. Go to Bindings and set HTTPS Only to ON.

When it is enabled, every incoming HTTP request is redirected to HTTPS, which adds an extra layer of security to the requests made to the app.

6. Use the latest version of TLS encryption

The TLS (Transport Layer Security) protocol secures the transmission of data over the internet using standard encryption technology, and encryption should use the latest version of TLS. App Service allows TLS 1.2 by default, which is the TLS level recommended by industry standards such as PCI DSS. App Service currently lets you set TLS versions 1.0, 1.1 and 1.2, and TLS 1.2 is strongly recommended for secure web app connections.

Steps:

1. Open your App Service and click on TLS/SSL settings under Settings section.

2. Go to Bindings and ensure that the Minimum TLS Version is set to the latest version, which here is 1.2.

7. Enable App Service Authentication

Azure App Service Authentication is a feature that can prevent anonymous HTTP requests from reaching the API app, or authenticate those that have tokens before they reach the API app.

By enabling App Service Authentication, every incoming HTTP request passes through it before being handled by the application code. It also handles authentication of users with the specified provider (Azure Active Directory, Facebook, Google, Microsoft Account or Twitter), validation, storing and refreshing of tokens, management of authenticated sessions, and injection of identity information into request headers.

Steps:

1. Open your App Service and click on Authentication / Authorization under Settings section.

2. Set App Service Authentication to ON

If an anonymous request is received from a browser, App Service will redirect to a logon page. To handle the logon process, a choice from a set of identity providers can be made, or a custom authentication mechanism can be implemented.

8. Enable System Assigned Managed Identity

Managed service identity in App Service makes the app more secure by removing secrets, such as credentials in connection strings, from the app. Once the app is registered with Azure Active Directory, it can connect securely to other Azure services without usernames and passwords.

Steps:

1. Open your App Service and click on Identity under Settings section.

2. Set the Status to ON
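The settings above can also be reviewed in bulk. The Python sketch below checks a site definition against recommendations 2 to 6 and 8, using property names from the Microsoft.Web/sites resource (`httpsOnly`, `clientCertEnabled`, `identity.type`, `siteConfig.http20Enabled`, `siteConfig.ftpsState`, `siteConfig.minTlsVersion`). The sample dict is hand-written rather than fetched from Azure, the stack version check is left out, and App Service Authentication is not covered because its settings are stored in a separate auth settings resource.

```python
def audit_site(site: dict) -> list[str]:
    """List the recommendations from this article that `site` does not meet.

    `site` is a dict shaped like a Microsoft.Web/sites resource; missing
    settings are treated as non-compliant (a deliberate simplification).
    """
    cfg = site.get("siteConfig") or {}
    findings = []
    if not cfg.get("http20Enabled", False):
        findings.append("HTTP version is not 2.0")
    if cfg.get("ftpsState", "AllAllowed") == "AllAllowed":
        findings.append("FTP state is All allowed")
    if not site.get("clientCertEnabled", False):
        # Only checks that client certificates are on, not the Require mode itself.
        findings.append("Client certificates are not enabled")
    if not site.get("httpsOnly", False):
        findings.append("HTTPS Only is off")
    min_tls = tuple(int(p) for p in cfg.get("minTlsVersion", "1.0").split("."))
    if min_tls < (1, 2):
        findings.append("Minimum TLS version is below 1.2")
    identity_type = (site.get("identity") or {}).get("type", "")
    if "SystemAssigned" not in identity_type:
        findings.append("System-assigned managed identity is off")
    return findings


# Hand-written sample, not the output of a real API call.
compliant = {
    "httpsOnly": True,
    "clientCertEnabled": True,
    "identity": {"type": "SystemAssigned"},
    "siteConfig": {"http20Enabled": True, "ftpsState": "FtpsOnly", "minTlsVersion": "1.2"},
}
```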

References:

https://docs.microsoft.com/en-us/azure/app-service/web-sites-configure#general-settings

https://docs.microsoft.com/en-us/azure/app-service/app-service-authentication-overview

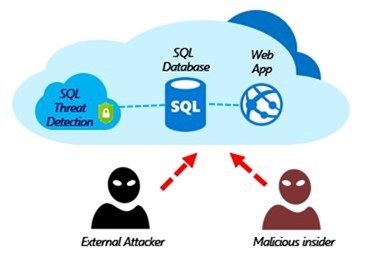

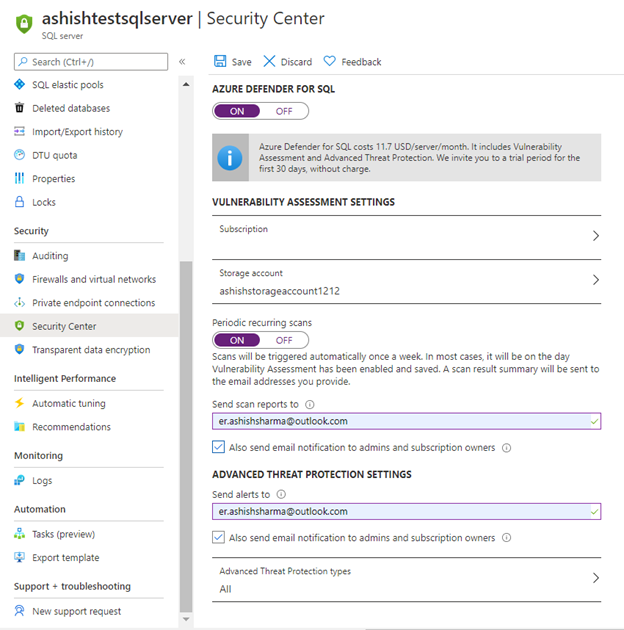

Security Recommendations for Azure SQL Database

In this article, we will cover the security recommendations you should follow to establish a secure baseline configuration for Azure SQL Database in your Azure subscription.

1. Enable auditing on SQL Servers & SQL databases:

Steps:

2. Enable threat detection on SQL Servers & SQL databases:

Steps:

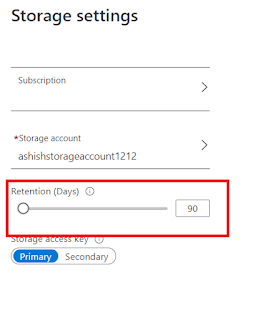

3. Configure a retention policy of more than 90 days.

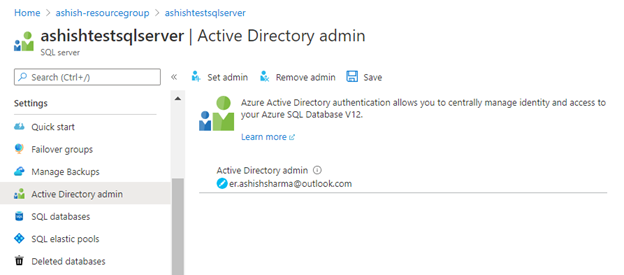

4. Use Azure Active Directory Authentication for authentication with SQL Database

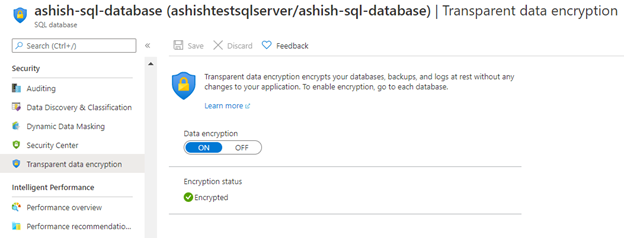

5. Enable Data encryption on SQL database

References:

- https://docs.microsoft.com/en-us/azure/sql-database/sql-database-threat-detection

- https://docs.microsoft.com/en-us/azure/sql-database/sql-database-auditing

- https://docs.microsoft.com/en-us/sql/relational-databases/security/encryption/transparent-data-encryption-with-azure-sql-database

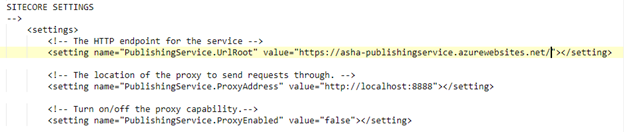
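Recommendation 3 above asks for a retention period of more than 90 days. A small Python check follows, assuming the retention value is expressed in days and, as with Azure SQL auditing to a storage account, that 0 means unlimited retention.

```python
def retention_ok(retention_days: int, minimum: int = 90) -> bool:
    """True if an audit retention setting meets 'greater than 90 days'.

    Assumes 0 means unlimited retention, as for Azure SQL auditing
    to a storage account.
    """
    if retention_days < 0:
        raise ValueError("retention_days cannot be negative")
    return retention_days == 0 or retention_days > minimum
```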

Configure Sitecore Publishing Service in Azure App Service

In this article, we will set up and configure Sitecore Publishing Service in Azure App Service.

1. Download Publishing service & module

You can download both from dev.sitecore.net.

Sitecore Publishing Service: an opt-in module that provides an alternative to the default Sitecore publishing mechanism, focusing on increased performance by performing operations in bulk. I downloaded "Sitecore Publishing Service 4.3.0".

Sitecore Publishing Service Module: provides integration with the opt-in Publishing Service, supporting high-performance publishing in large-scale Sitecore setups.

2. Install & Configure publishing service in Azure App Service

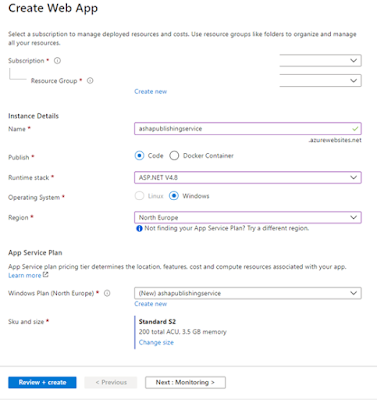

2.1 Create Azure App service.

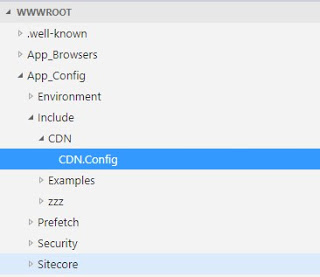

Once the App Service is created, open Kudu & navigate to Debug Consule -> CMD. Navigate to site\\wwwroot folder.

Drag & Drop zip folder “Sitecore Publishing Service 4.3.0-win-x64.zip” to kudu folder view area. You can unzip the folder using below command.

unzip -o *.zip

2.2 Run the commands below in the Kudu CMD console to set the connection strings of the core, master and web databases for the Publishing Service, placing each connection string between the quotes.

Sitecore.Framework.Publishing.Host configuration setconnectionstring core ""

Sitecore.Framework.Publishing.Host configuration setconnectionstring master ""

Sitecore.Framework.Publishing.Host configuration setconnectionstring web ""

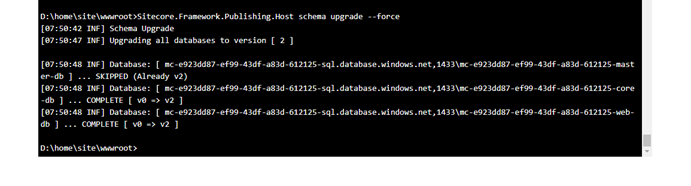

2.3 Upgrade the database schema for the Sitecore Publishing Service using the command below.

Sitecore.Framework.Publishing.Host schema upgrade --force

2.4 To validate the configuration, open the Publishing Service URL in a browser as shown below:

http://<>.azurewebsites.net/api/publishing/maintenance/status

It should return “status”: 0 as illustrated below:
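The status check in step 2.4 can be scripted. A minimal Python sketch follows, assuming the endpoint returns a JSON object with a numeric `status` field as described above; the app name is hypothetical, and HTTPS is used because the default *.azurewebsites.net domain serves it.

```python
import json


def status_url(app_name: str) -> str:
    """Maintenance status endpoint of a Publishing Service hosted in App Service."""
    return f"https://{app_name}.azurewebsites.net/api/publishing/maintenance/status"


def is_healthy(body: str) -> bool:
    """True when the JSON body reports "status": 0, as described above."""
    return json.loads(body).get("status") == 0


# Usage (not run here; "my-publishing-svc" is a hypothetical app name):
# from urllib.request import urlopen
# with urlopen(status_url("my-publishing-svc")) as resp:
#     print(is_healthy(resp.read().decode("utf-8")))
```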

3. Configure the Sitecore Publishing module in the CD & CM instances

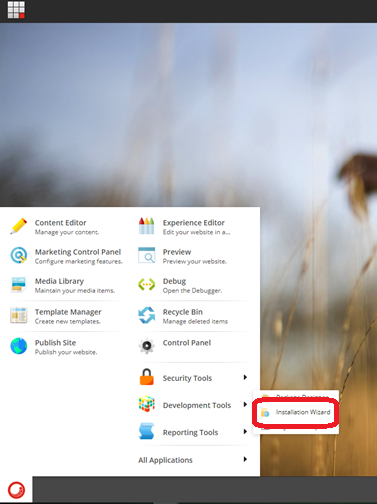

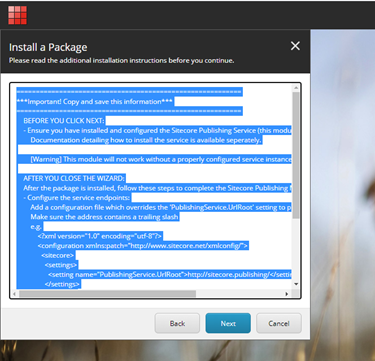

3.1 Log in to the CM instance and use the Installation Wizard to install the Publishing module.

3.2 Once the module is installed, open the CM App Service -> Open Kudu.

Navigate to the App_Config/Modules/PublishingService folder and open Sitecore.Publishing.Service.config.

Replace http://localhost:5000/ with the URL of the Publishing Service App Service.

3.3 Now download Sitecore.Publishing.Service.Delivery.config from the App_Config/Modules/PublishingService folder, and the following DLLs from the bin folder of the CM instance:

- Sitecore.Publishing.Service.dll

- Sitecore.Publishing.Service.Abstractions.dll

- Sitecore.Publishing.Service.Delivery.dll

3.4 Open the CD App Service -> Open Kudu. Upload the downloaded Sitecore.Publishing.Service.Delivery.config to the App_Config/Modules/PublishingService folder and the downloaded DLLs to the bin folder.

Now you can see the Publishing icon in the Launchpad. Clicking it opens the Publishing dashboard, which lists Active, Queued and Recent jobs.

If you have any feedback, questions or suggestions for improvement please let me know in the comments section.

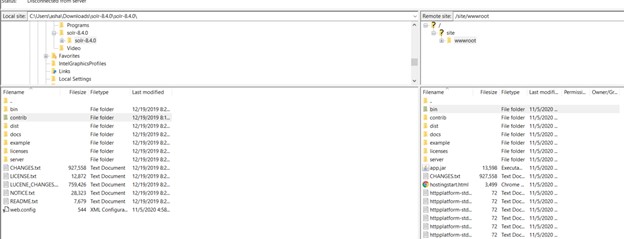

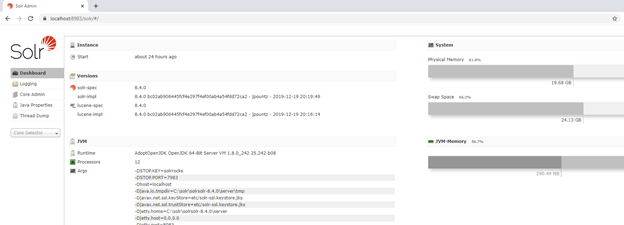

Install Solr as an Azure App Service

Since Sitecore 9.0.2, Solr has been a supported search technology for Sitecore Azure PaaS deployments. In this article, we will install Solr 8.4.0 in Azure App Service for Sitecore 10.

1. Create Azure App Service

Log in to Azure and create an Azure App Service.

Make sure the runtime stack is Java.

2. Download Solr

Download Solr 8.4.0 from https://archive.apache.org/dist/lucene/solr/

Extract the files and add the following web.config file to the Solr package.

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <system.webServer>
    <handlers>
      <add name="httpPlatformHandler" path="*" verb="*" modules="httpPlatformHandler" resourceType="Unspecified" />
    </handlers>
    <httpPlatform processPath="%HOME%\site\wwwroot\bin\solr.cmd"
                  arguments="start -p %HTTP_PLATFORM_PORT%"
                  startupTimeLimit="20"
                  startupRetryCount="10"
                  stdoutLogEnabled="true">
    </httpPlatform>
  </system.webServer>
</configuration>
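HttpPlatformHandler starts the process named in processPath, expands %NAME% environment variable references in processPath and arguments, and tells the process which port to listen on through HTTP_PLATFORM_PORT. The Python sketch below mimics only that expansion step so you can see the command App Service ends up launching; the HOME value and port number are sample values.

```python
import re


def expand(template: str, env: dict[str, str]) -> str:
    """Replace %NAME% tokens with values from `env`; unknown tokens are left as-is."""
    return re.sub(r"%([A-Za-z_][A-Za-z0-9_]*)%",
                  lambda m: env.get(m.group(1), m.group(0)),
                  template)


# Sample values; App Service supplies the real HOME and a port for each start.
env = {"HOME": r"D:\home", "HTTP_PLATFORM_PORT": "12345"}
process = expand(r"%HOME%\site\wwwroot\bin\solr.cmd", env)
args = expand("start -p %HTTP_PLATFORM_PORT%", env)
```

This is why the Solr files must sit in the web root: processPath resolves to bin\solr.cmd under site\wwwroot.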

3. Deployment

You can use FTP to copy the local Solr files to the web root of the App Service.

Go to Deployment Center and copy the FTPS endpoint, username and password.

Once all the files are copied, restart the App Service.

Browse to the App Service URL. You will see the Solr dashboard, and you can use this Solr instance for indexing in your Sitecore instances.

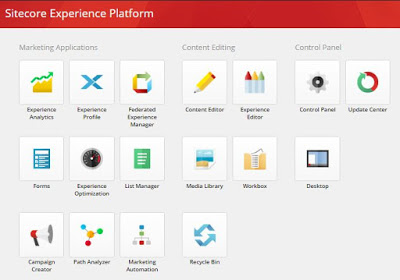

EXM not visible in Sitecore 10 Launchpad

After installing Sitecore 10, you might find that the EXM app is not visible in the Launchpad.

References: https://doc.sitecore.com/users/exm/100/email-experience-manager/en/index-en.html

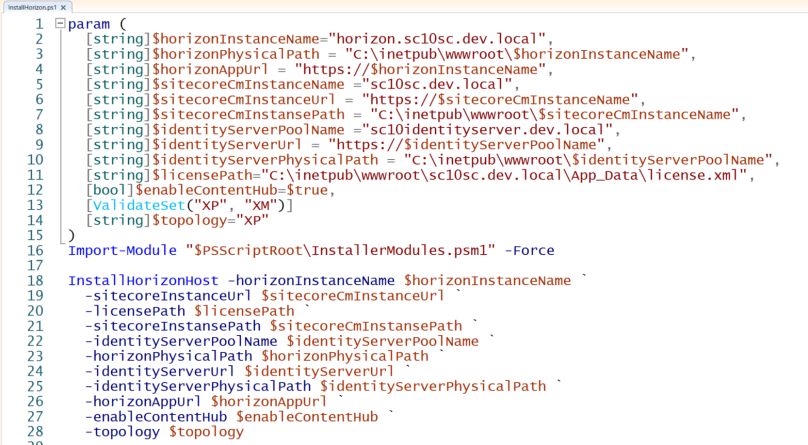

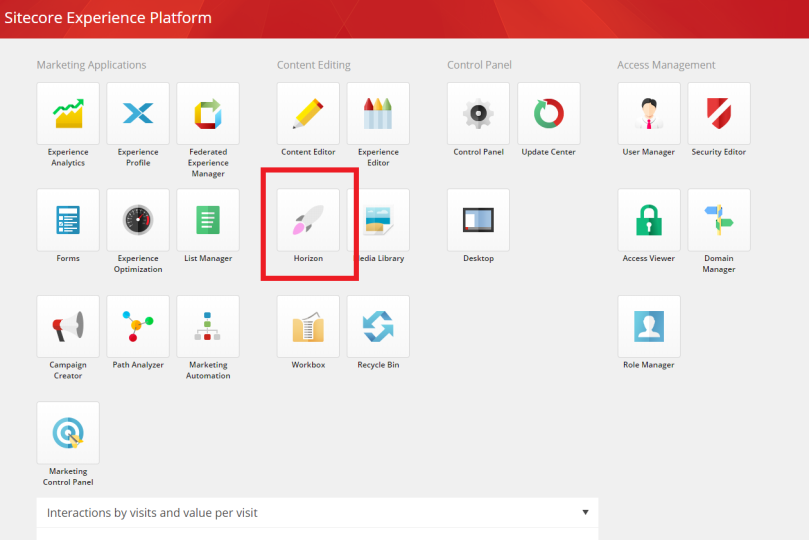

Install Horizon module in Sitecore 10

In this article, we will install the Horizon module in Sitecore 10. To install Sitecore 10 using SIA, you can refer to my article: https://www.iamashishsharma.com/2020/08/install-sitecore-10-using-sitecore.html.

Follow the steps below to install the Horizon module:

1. Download the Horizon module from here.

2. Extract the downloaded zip folder and open InstallHorizon.ps1 in Windows PowerShell ISE in administrator mode.

Update the following variables:

$horizonInstanceName: Name of the Horizon instance

$sitecoreCmInstanceName: Name of the Sitecore instance created in IIS via SIA

$identityServerPoolName: Name of the Sitecore Identity instance created in IIS via SIA

$LicensePath: Path to your licence file

$enableContentHub: Set to true to enable Content Hub

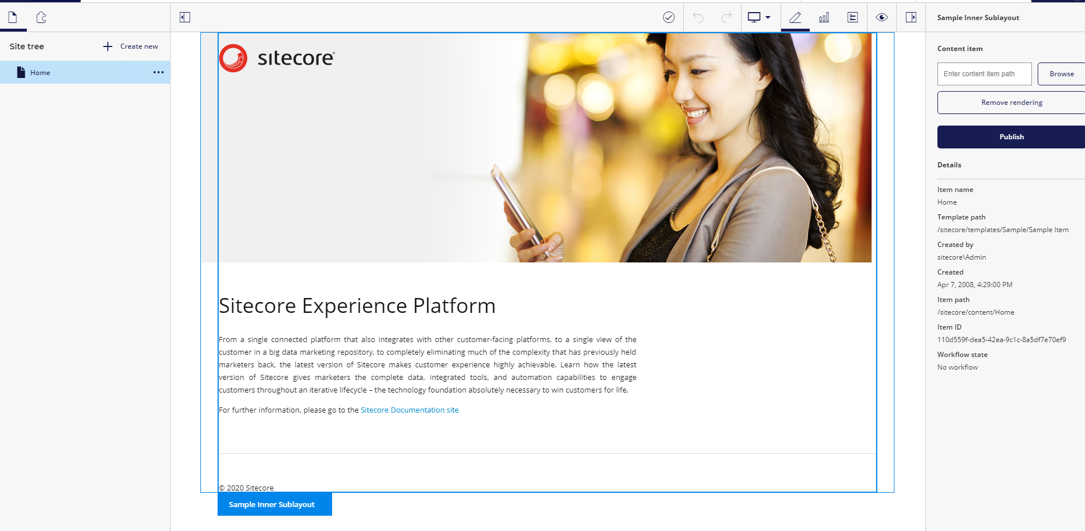

Once the script completes successfully, a new Horizon website is created. Log in to Sitecore and you will see an additional Horizon icon in the Launchpad. Click the icon to open the new Horizon website.

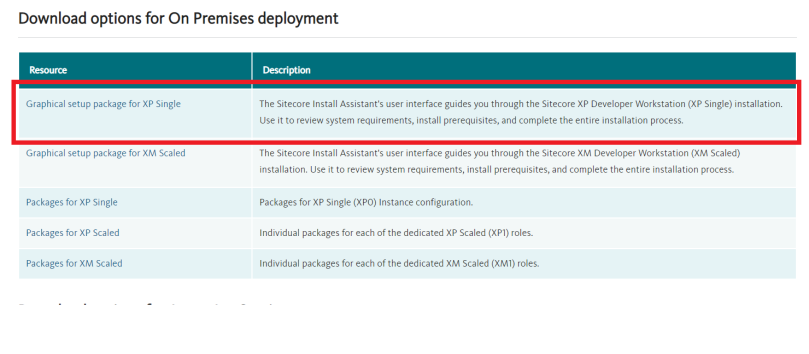

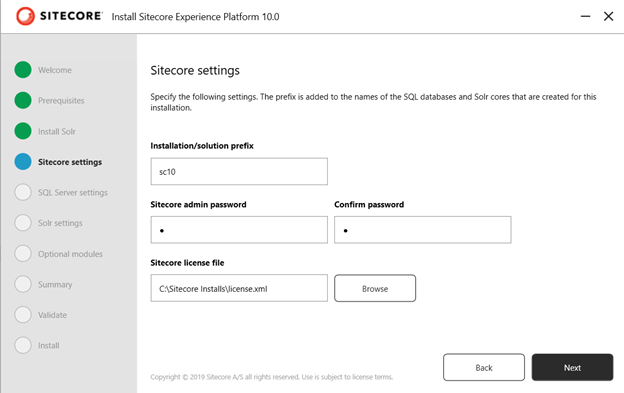

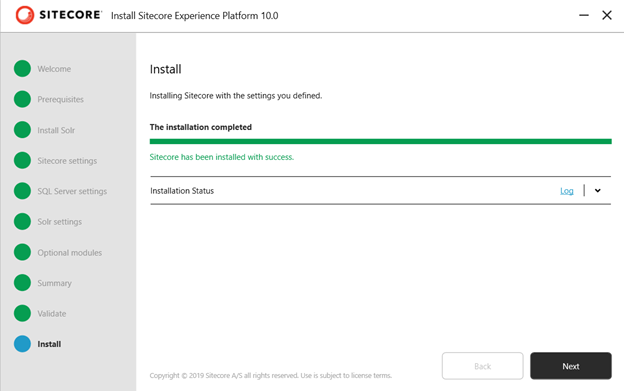

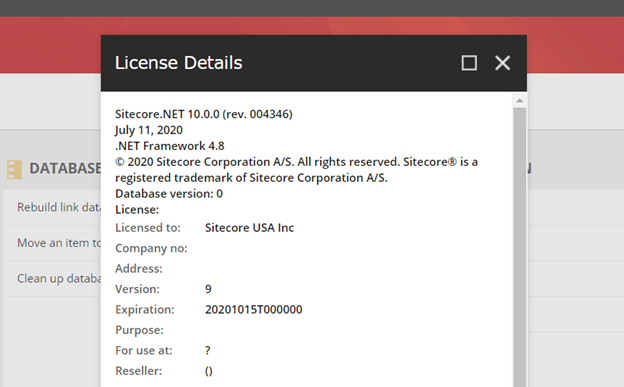

Install Sitecore 10 using Sitecore Install Assistant

This article will show the steps to install Sitecore 10 on your local machine using Sitecore Install Assistant.

1. Go to dev.sitecore.net and download Sitecore 10. I downloaded XP Single, but you can choose other on-premises options as well.

2. Extract the downloaded zip folder and run the "setup.exe" file as Administrator.

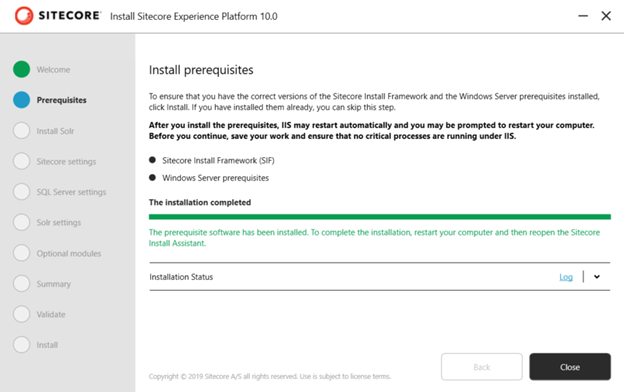

3. Click Start to begin the installation.

4. Install the prerequisites to make sure the required SIF and Windows Server prerequisites are up to date.

5. Enter the Solr port, prefix and path to install Solr.

This installs Solr version 8.4.0. Browse to your Solr URL to make sure it is running successfully.

6. Enter your Sitecore prefix and password, and browse to your Sitecore license file.

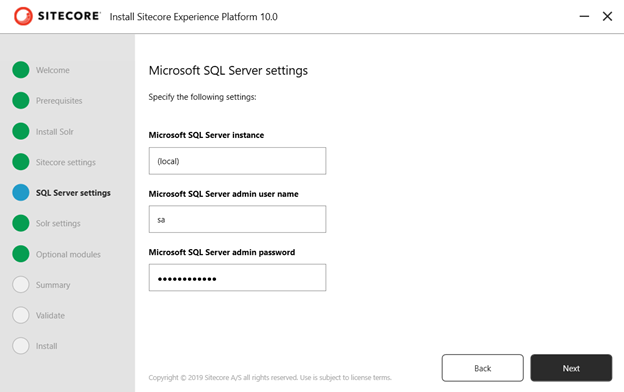

7. Enter SQL Server details.

8. Confirm your Solr details.

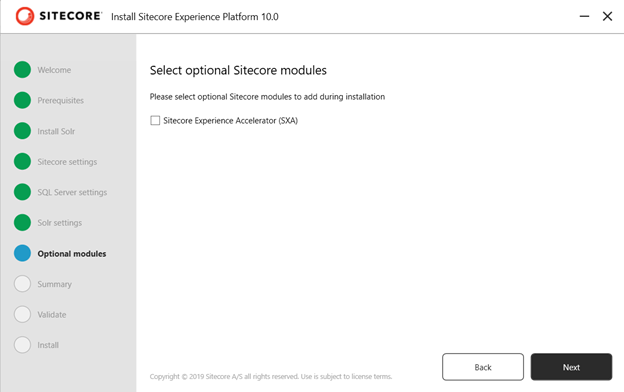

9. If you want to install SXA, select this option.

10. Review the summary details and click Next.

11. This step validates all the parameters. Once they are validated, click Install to start the Sitecore installation.

12. Once the installation is complete, you can open the Sitecore instance.

Log in using the admin account and the Sitecore admin password you entered.

If you have any feedback, questions or suggestions for improvement please let me know in the comments section.

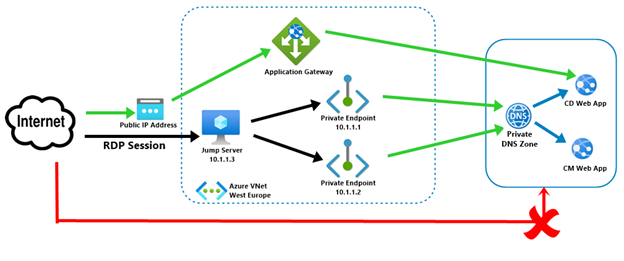

Secure your Sitecore environment using Azure Private Link

Overview of Private Link:

A Private Endpoint is a special network interface (NIC) for your Azure Web App in a subnet of your Virtual Network (VNet). The Private Endpoint is assigned an IP address from the IP address range of your VNet. The connection between the Private Endpoint and the Web App uses a secure Private Link.
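Because the Private Endpoint takes its address from your subnet, a quick sanity check is that the app's hostname, resolved from inside the VNet, returns an address from that subnet. The Python sketch below does the range arithmetic with the standard ipaddress module; the subnet and addresses are sample values, and it relies on Azure reserving the first four addresses and the last address in every subnet.

```python
import ipaddress


def usable_range(subnet_cidr: str):
    """First and last addresses Azure can hand out in a subnet.

    Azure reserves the first four addresses and the last address of every subnet.
    """
    net = ipaddress.ip_network(subnet_cidr)
    return net.network_address + 4, net.broadcast_address - 1


def endpoint_in_subnet(endpoint_ip: str, subnet_cidr: str) -> bool:
    """True if `endpoint_ip` is an assignable address inside `subnet_cidr`."""
    ip = ipaddress.ip_address(endpoint_ip)
    first, last = usable_range(subnet_cidr)
    return first <= ip <= last
```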

Private Link enables you to host your apps on an address in your Azure Virtual Network (VNet) rather than on a shared public address. It provides secure connectivity between clients on your private network and your Web App. By moving the endpoint for your app into your VNet you can:

1. Isolate your apps from the internet: by configuring a Private Endpoint for your app, you can securely host line-of-business and other intranet applications.

2. Prevent data exfiltration: since the Private Endpoint only goes to one app, you don’t need to worry about data exfiltration situations.

Reference: https://docs.microsoft.com/en-us/azure/app-service/networking/private-endpoint

If you just need a secure connection between your VNet and your Web App, a Service Endpoint is the simplest solution. If you also need to reach the web app from on-premises through an Azure Gateway, a regionally peered VNet or a globally peered VNet, Private Endpoint is the solution.

Private Link vs App Service Environment: the difference between using a Private Endpoint and an ILB ASE is that an ASE can host many apps behind one VNet address, while a Private Endpoint can have only one app behind one address.

Previously, accessing your Sitecore instances (CD or CM roles) within your VNet was possible via an ILB App Service Environment or an Azure Application Gateway with an internal inbound address. In this article, we will create Private Endpoints and isolate the Sitecore environment from the internet.

Once the architecture above is implemented:

1. Public access to the Sitecore CD and CM web apps will be disabled.

2. Users can access the CD instance via the Application Gateway’s public IP address. Users need to map their domain name to the Application Gateway.

3. To access the CM instance, users need to use an RDP session via a jump server.

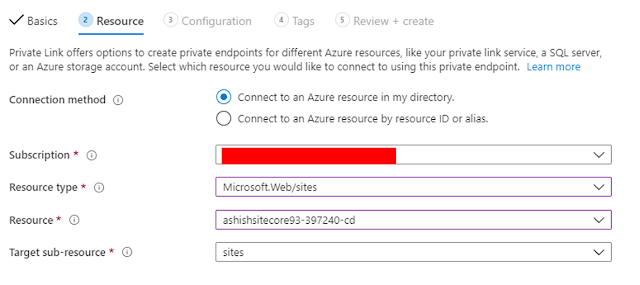

Follow the steps below to configure Azure Private Link for the Sitecore web instances:

1. Go to the CD instance’s App Service plan and change the plan to P1v2. Currently, Private Endpoint is only available in PremiumV2 plans.

2. Create a Private Endpoint. Select your subscription and resource group, then enter the endpoint name and select your location as shown below.

3. In Resource type, select Microsoft.Web/sites and then select your CD instance.

4. I already have a virtual network, so I selected it. Then configure your Private DNS integration as illustrated below.

5. Perform the above steps (1-4) for the CM instance.

6. Open your CD and CM instances in your browser. You will no longer be able to access them.

7. Create a virtual machine in the same virtual network. Open the RDP port so that this VM can act as a jump server.

8. Once the VM is deployed, open an RDP session to it and browse to your CD and CM instances. You should be able to access both.

9. To access the CD instance publicly, create an Azure Application Gateway.

9.1. Select the same virtual network that you selected in the steps above.

9.2. Create a new public IP address.

9.3. In the backend pool, select your CD instance.

9.4. Add a routing rule. Create a listener and upload an HTTPS certificate as shown below.

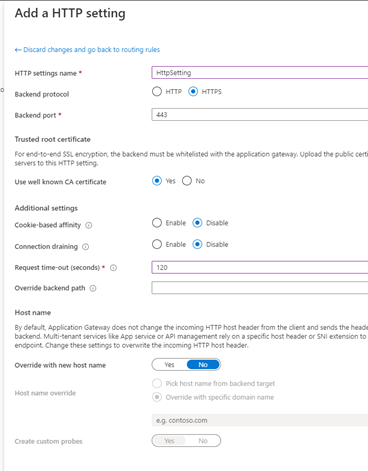

Create an HTTP setting as illustrated below.

9.5. Once the Application Gateway is deployed, check your backend health.

10. Browse to the Application Gateway’s IP address. You will be able to access the CD instance publicly, while the instance itself is reachable only via its private IP and your requests go through the virtual network.

I hope this information helped you. If you have any feedback, questions or suggestions for improvement please let me know in the comments section.

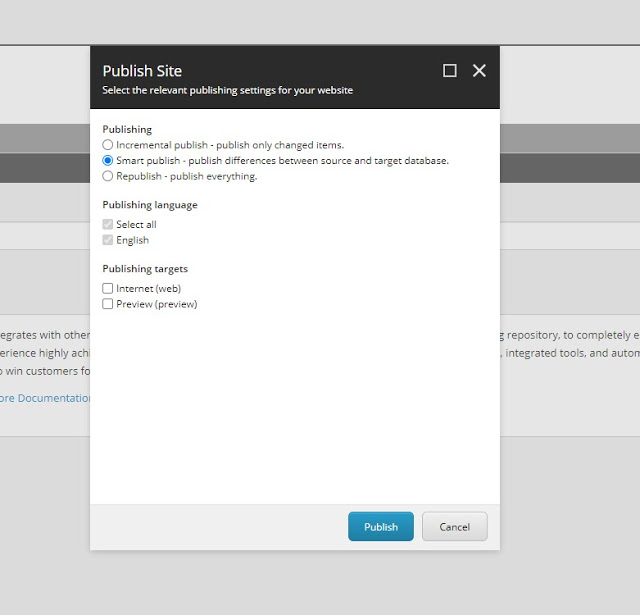

Publish items to a Preview Publishing target

Sometimes site changes need to be reviewed before they are published to the live site. In this scenario, users need to review the changes in normal view mode before the item reaches the final workflow state and is published to the public site. This can be done using Sitecore’s Preview Publishing Target feature: adding a preview publishing target lets your content approvers preview content before it is made available to the whole world through your website.

Follow the steps below to configure a preview publishing target:

1. Clone your CD instance. Refer to this article, which describes how to clone an Azure web app: https://www.iamashishsharma.com/2020/08/cloning-azure-app-services.html

2. Export your web database and import it into SQL Server as preview-db.

3. In the CD instance, replace the web database connection string with the preview database connection string.

4. Add a preview database connection string to the CM instance.

5. In the CM instance, add a database node inside the databases node of Sitecore.config, as shown below (search for the databases node in Sitecore.config).

$(id)

Images/database_web.png

true

publishing

100MB

50MB

2500KB

50MB

2500KB

$(id)

6. Then add an eventQueueProvider node inside the eventing node (search for the eventing node in Sitecore.config).

7. Add a property store for the preview database in Sitecore.PropertyStore.config in the CM instance.

8. Create a new folder “Preview” inside the App_Config/Include folder. Then, in both the CD and CM instances, create a new config file Sitecore.ContentSearch.Solr.Index.Preview.config in App_Config/Include/Preview for the Solr preview index "sitecore_preview_index". Copy the content below into the config file.

<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/" xmlns:role="http://www.sitecore.net/xmlconfig/role/" xmlns:search="http://www.sitecore.net/xmlconfig/search/">

preview

true

$(id)

$(id)

preview

/sitecore

false

false

Perform in above step in both CD & CM instances.

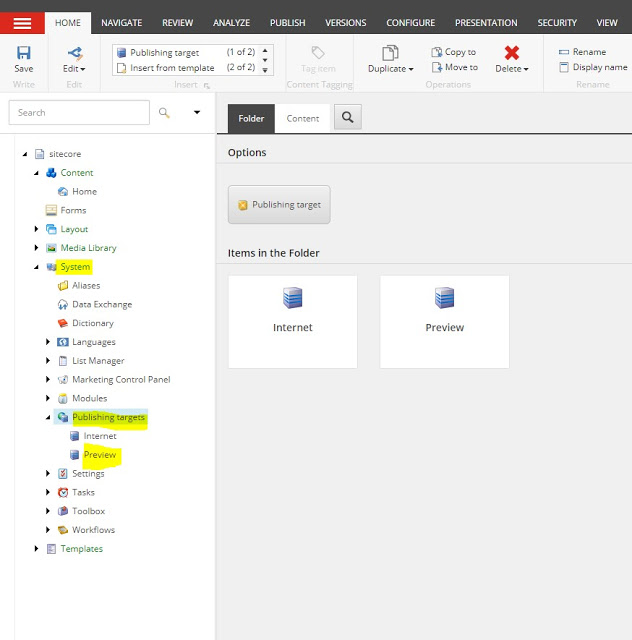

9. Now Login to CM instance-> Content Editor. Then go Sitecore\\System\\Publishing Targets & Create new Publishing target & add Target database \”preview\”.

Then, validate the changes by publishing an item. It will also show “Preview” as publishing target.

10. Login to Solr and add new collection \”sitecore_preview_index and restart CM instance.

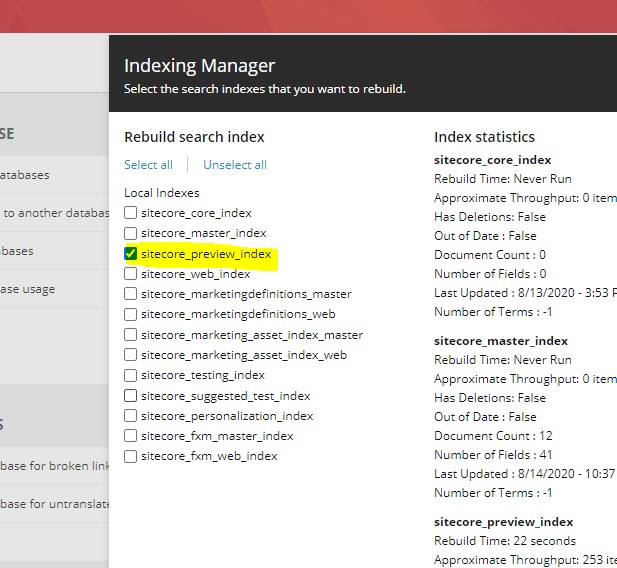

Validate the changes by rebuilding the search index. The index “sitecore_preview_index” can be shown as shown in below image.
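Steps 3 and 4 above both start from the existing web connection string and swap in the preview-db database. A minimal Python sketch of that substitution follows; the server name, database names and credentials are hypothetical, and the naive split on ';' does not handle quoted values that contain a semicolon.

```python
def with_database(conn_str: str, new_db: str) -> str:
    """Return `conn_str` with its database name replaced by `new_db`.

    Handles the 'Initial Catalog' and 'Database' keywords case-insensitively.
    Naive: values that themselves contain ';' (quoted passwords) are not handled.
    """
    parts = []
    for segment in conn_str.split(";"):
        key, sep, _value = segment.partition("=")
        if sep and key.strip().lower() in ("initial catalog", "database"):
            segment = f"{key}={new_db}"
        parts.append(segment)
    return ";".join(parts)


# Hypothetical server, database and credentials.
web = ("Data Source=tcp:myserver.database.windows.net,1433;"
       "Initial Catalog=sc10_web;User ID=scuser;Password=secret")
preview = with_database(web, "preview-db")
```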

I hope this information helped you. If you have any feedback, questions or suggestions for improvement please let me know in the comments section.

Cloning Azure App Services

Azure Web App cloning is the ability to clone an existing web app to a newly created app, often in a different region. This lets customers deploy a number of apps across different regions quickly and easily.

Follow the below steps to clone your existing web app:

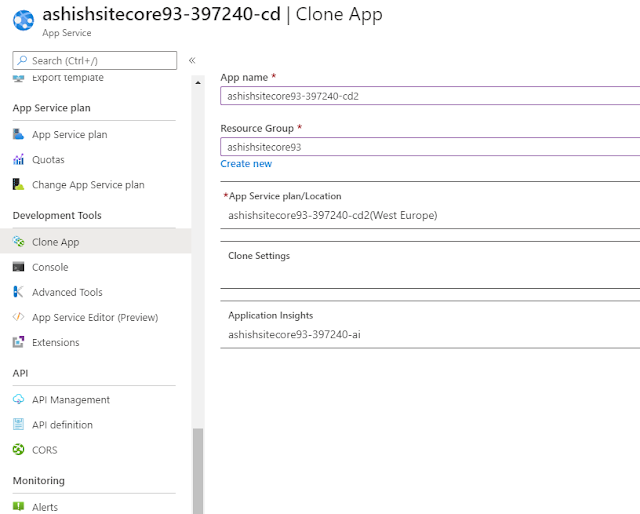

1. Go to App Service and click on Clone as shown below.

2. Enter your App name and select your Resource group.

3. You can select existing App Service plan or you can create a new one. Here, I have created a new App service plan in West Europe location of S1 Standard tier.

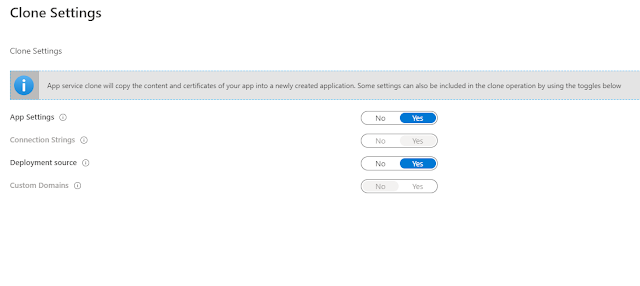

4. Click on Clone Settings & select the required settings you want to clone to new App service.

5. Select your existing Application Insights as illustrated below.

You can also create a new Application insight.

6. Click on Create, then your new App service will be created in few minutes.

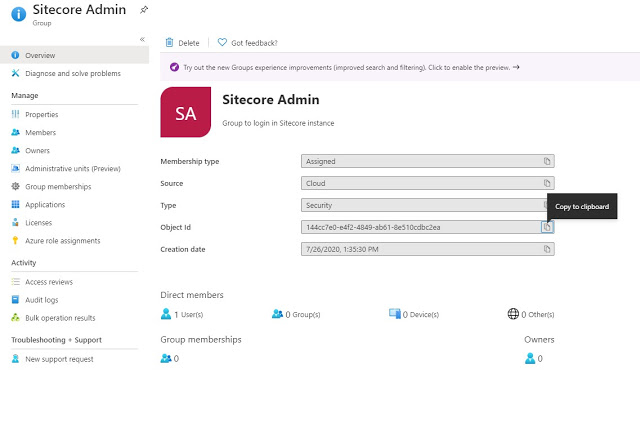

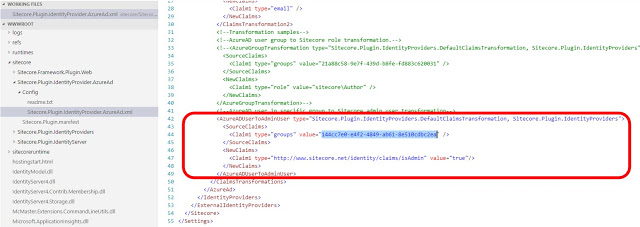

Login to Sitecore instance using Azure Active directory

1. Create Application in Azure AD

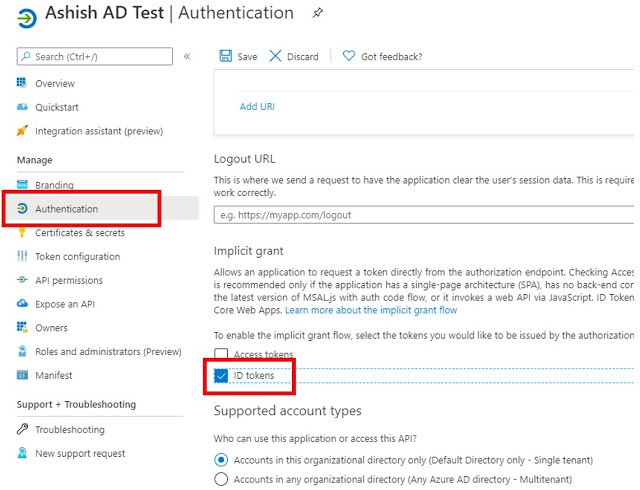

Create an application in Azure Active directory and in Redirect URI, add the URL of your Sitecore Identity resource with suffix \”/signin-oidc\”.
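The redirect URI is simply the Sitecore Identity URL plus /signin-oidc; the only pitfall is a trailing slash producing //signin-oidc. A tiny Python sketch using the standard urllib.parse module; the identity host name is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def redirect_uri(identity_url: str) -> str:
    """Append /signin-oidc to the Sitecore Identity URL without doubling the slash."""
    scheme, netloc, path, _query, _fragment = urlsplit(identity_url)
    return urlunsplit((scheme, netloc, path.rstrip("/") + "/signin-oidc", "", ""))
```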

Comparison between Azure Search & Solr

| Limit | Azure Search | Solr |
|---|---|---|
| Max simple fields per index | 1000 | No limit |
| Max complex collection fields per index | 40 | No limit |
| Max elements across all complex collections per document | 3000 | No limit |
| Max depth of complex fields | 10 | No limit |
| Max suggesters per index | 1 | No limit |
| Max scoring profiles per index | 100 | No limit |
| Max functions per profile | 8 | No limit |
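If you are weighing Azure Search against Solr, one quick way to apply the table above is to compare your index schema's counts with the Azure Search limits. The Python sketch below uses the figures from the table, which may have changed since it was written; the stats keys are hypothetical names chosen for this example.

```python
# Azure Search limits as listed in the comparison table above.
AZURE_SEARCH_LIMITS = {
    "simple_fields_per_index": 1000,
    "complex_collection_fields_per_index": 40,
    "complex_collection_elements_per_document": 3000,
    "complex_field_depth": 10,
    "suggesters_per_index": 1,
    "scoring_profiles_per_index": 100,
    "functions_per_profile": 8,
}


def exceeded_limits(schema_stats: dict[str, int]) -> dict[str, tuple[int, int]]:
    """Map each limit the schema exceeds to (actual, allowed)."""
    return {
        name: (schema_stats[name], limit)
        for name, limit in AZURE_SEARCH_LIMITS.items()
        if schema_stats.get(name, 0) > limit
    }
```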

Sitecore Content Hub

This product is still relatively unknown in the marketplace, so let’s discuss some of its components.

Content Crisis:

A content crisis can be described by the following problems:

- Unable to deliver the right content to the right people at the right time through the right channels.

- Lack of collaboration

- Unable to reuse content, leading to a lot of duplicate content

- Most of the time is spent finding content

To mitigate this issue, Sitecore introduced Content Hub, a complete SaaS solution for 360-degree marketing content management. It includes several modules, such as PIM, Content and Project modules, as well as Print and DRM capabilities.

Content Hub covers the entire content life cycle and manages every aspect of your marketing content for all your channels in a single, integrated solution. It offers four main capabilities:

- Digital Asset Management

- Content Marketing Platform

- Marketing Resource Management

- Product Content Management

Components

Digital Asset Management:

- A solution for storing, managing, distributing and controlling digital assets.

- Machine learning helps tag your content.

- Search & find your assets quickly & easily.

- Includes integrated Digital rights management to ensure full compliance

- Handle any media type from photos, videos, source files, diagrams and more.

Content Marketing Platform

- Effectively plan, manage and collaborate on content strategy.

- Optimize content usage and distribute content to target audiences across channels.

- Provides workflow processes.

- Provides an overall view of all content and how it is performing.

Marketing Resource Management

- Manage, budget and control every phase of a marketing project.

- Marketers can define and structure projects and campaigns, allocate budgets and assign them to stakeholders.

- Stakeholders can take part in creating, reviewing, proofing and approving content.

- Steer teams to achieve production targets on time with intuitive collaboration, review and approval tools.

Product Content Management

- Stores product information and manages your product marketing lifecycle.

- Centralise and automate all maintenance and management of product-related content.

- Leverage existing product content in your processes for creating new content.

For more detailed information about Content Hub, refer to the articles below:

- Sitecore Content Hub documentation portal

- Sitecore Content Hub product

- What is Sitecore Content Hub

- SITECORE CONTENT HUB – MORE THAN JUST A DAM

In the next article, we will discuss Content Hub connectors and integration.

Certified Kubernetes Administrator

Azure Synapse Analytics

| Feature | GA | Preview |
|---|---|---|
| Limitless scale | | |
| Provisioned compute (data warehouse) | Available | NA |
| Materialized views & result-set cache | Available | NA |
| Workload importance | Available | NA |
| Workload isolation | Available | NA |
| Serverless data lake exploration | NA | Available |
| Powerful insights | | |
| Power BI integration | NA | Available |
| Azure Machine Learning integration | NA | Available |
| Streaming analytics (data warehouse) | NA | Available |
| Apache Spark integration | NA | Available |
| Unified experience | | |
| Hybrid data ingestion | NA | Available |
| Azure Synapse Studio | NA | Available |
| Instant Clarity | | |
| Azure Synapse Link | NA | Available |
| Unmatched security | | |
| Column- and row-level security | Available | NA |
| Dynamic data masking | Available | NA |
| Private endpoints | Available | NA |

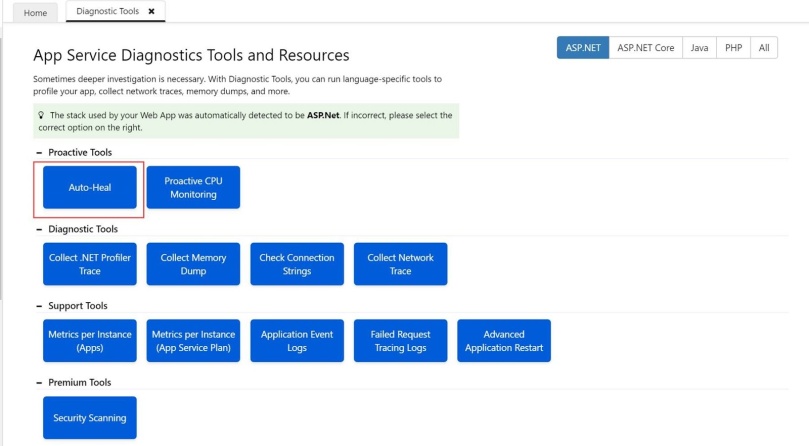

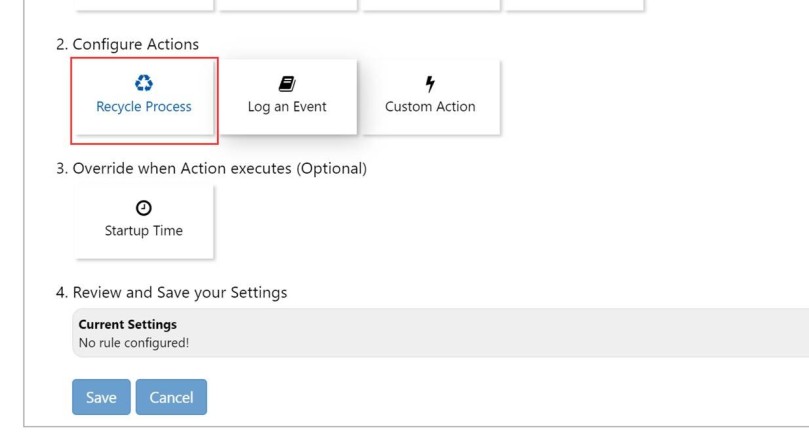

Mitigate 5XX error using Auto heal feature

Docker announces collaboration with Azure

docker login azure

docker context create aci-westus aci --aci-subscription-id xxx --aci-resource-group yyy --aci-location westus

docker context use aci-westus

- Easily log in to Azure directly from the Docker CLI

- Automatically set up an Azure Container Instances (ACI) environment with easy-to-use defaults and no infrastructure overhead

- Switch from a local context to a cloud context to run applications quickly and easily

- Simplifies single-container and multi-container application development through the Compose specification, letting developers run fully Docker-compatible commands natively against a cloud container service

- Lets developer teams share their work through Docker Hub, including persistent, collaborative cloud development environments where they can do remote pair programming and real-time collaborative troubleshooting

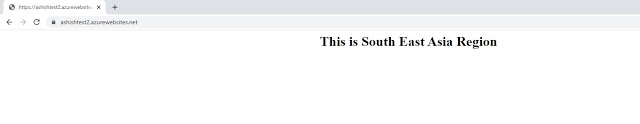

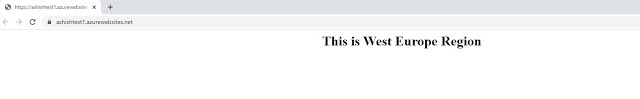

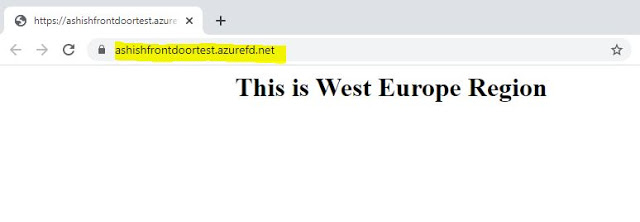

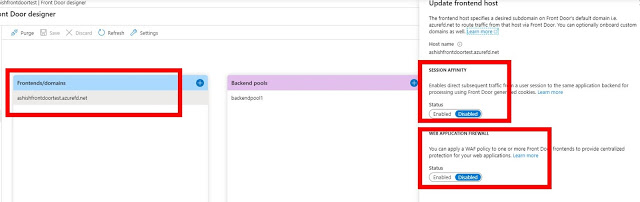

Azure Front Door Deployment using ARM Template

- Download this git repo and fill in the parameters in azuredeploy.parameters.json, as in the sample below.

- Then run the PowerShell commands that follow the sample to provision the AFD.

{
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "frontDoorName": {
      "value": "ashishfrontdoortest"
    },
    "dynamicCompression": {
      "value": "Enabled"
    },
    "backendPools1": {
      "value": {
        "name": "backendpool1",
        "backends": [
          {
            "address": "ashishtest1.azurewebsites.net",
            "httpPort": 80,
            "httpsPort": 443,
            "weight": 50,
            "priority": 1,
            "enabledState": "Enabled"
          },
          {
            "address": "ashishtest2.azurewebsites.net",
            "httpPort": 80,
            "httpsPort": 443,
            "weight": 50,
            "priority": 2,
            "enabledState": "Enabled"
          }
        ]
      }
    }
  }
}

Login-AzureRmAccount

$ResourceGroupName = "" # Resource group name
$AzuredeployFileURL = "" # File path of azuredeploy.json
$AzuredeployParametersFile = "" # File path of azuredeploy.parameters.json

Set-AzureRmContext -SubscriptionId "" # Subscription ID

New-AzureRmResourceGroupDeployment -ResourceGroupName $ResourceGroupName -TemplateFile $AzuredeployFileURL -TemplateParameterFile $AzuredeployParametersFile
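The backendPools1 parameter above gives ashishtest1 priority 1 and ashishtest2 priority 2, both with weight 50. Front Door sends traffic to the healthy backends with the lowest priority value and uses weights to split traffic only among backends that share that priority. Here is a simplified Python model of that selection. It is a sketch, not an Azure API: `pick_backend` is hypothetical, and it ignores Front Door's latency-sensitivity step and the details of health probing.

```python
import random
from typing import Optional

# Mirrors the two backends in azuredeploy.parameters.json above.
BACKENDS = [
    {"address": "ashishtest1.azurewebsites.net", "weight": 50, "priority": 1, "enabledState": "Enabled"},
    {"address": "ashishtest2.azurewebsites.net", "weight": 50, "priority": 2, "enabledState": "Enabled"},
]


def pick_backend(backends: list[dict], healthy: set[str],
                 rng: Optional[random.Random] = None) -> Optional[str]:
    """Simplified model: keep enabled, healthy backends, keep only the lowest
    priority value among them, then choose one of those by weight."""
    rng = rng or random.Random()
    candidates = [b for b in backends
                  if b["enabledState"] == "Enabled" and b["address"] in healthy]
    if not candidates:
        return None  # nothing healthy to route to
    best = min(b["priority"] for b in candidates)
    tier = [b for b in candidates if b["priority"] == best]
    chosen = rng.choices(tier, weights=[b["weight"] for b in tier], k=1)[0]
    return chosen["address"]


both = {"ashishtest1.azurewebsites.net", "ashishtest2.azurewebsites.net"}
print(pick_backend(BACKENDS, both))                                # primary backend
print(pick_backend(BACKENDS, {"ashishtest2.azurewebsites.net"}))   # failover backend
```

With this configuration the weights never come into play, because the two backends have different priorities. To spread traffic 50/50 instead of using ashishtest2 as a failover, you would give both backends the same priority.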

Application Change Analysis

How to enable the Change Analysis feature

- Navigate to your Azure Web App in the Azure Portal

- Select Diagnose and solve problems from the Overview page, then click Health Check.

- Click Application Changes, as shown in the image below.

- In the Application Changes pane, enable change analysis for your application, as illustrated below.

- Whenever the application changes, go back to Application Changes and click Scan changes now.

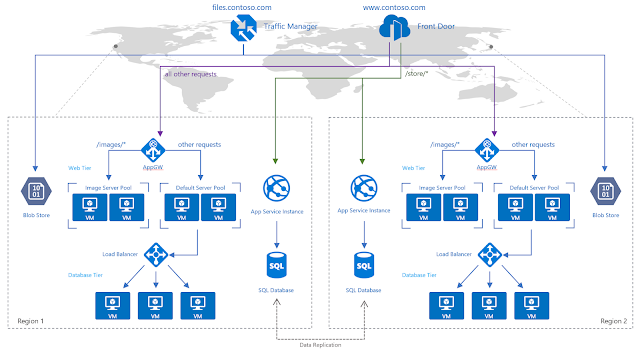

Difference between Azure Front Door Service and Traffic Manager

In this article, I will compare Azure Front Door to Azure Traffic Manager in terms of performance and functionality.

Similarity:

Like Azure Traffic Manager, Azure Front Door provides global load balancing, distributing traffic across Azure regions, other cloud providers or even your on-premises environment.

Both AFD & Traffic Manager support:

- Multi-geo redundancy: If one region goes down, traffic is automatically routed to the next closest healthy region, without manual intervention.

- Closest region routing: Traffic is automatically routed to the closest region.

Differences:

- Azure Front Door provides TLS termination (SSL offload); Azure Traffic Manager does not. AFD terminates client TLS connections at the edge, which takes that load off your web front ends.

- Azure Front Door provides application layer (layer 7) processing; Azure Traffic Manager, which works at the DNS level, does not.

- With AFD, users typically get better performance than with Traffic Manager, because AFD uses Anycast and split TCP to connect users to the nearest edge location, which lowers latency.

- AFD provides a Web Application Firewall (WAF) that helps protect your application from common web attacks, including application-layer DDoS attacks.

- We can perform URL rewriting in Azure Front Door but not in Traffic Manager.

- Traffic Manager relies on DNS lookups for routing, so clients keep using cached DNS answers until they expire. AFD works as a reverse proxy, which allows faster failover.

- AFD caches static content, while Traffic Manager has no caching mechanism.
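To see why DNS-based failover is slower, here is a back-of-the-envelope Python model of how long clients may keep reaching a failed endpoint behind Traffic Manager. The formula is a simplifying assumption, not Microsoft's published calculation: the endpoint is marked unhealthy after (tolerated failures + 1) failed probes, and clients then keep the cached DNS answer for up to one TTL. The example values are assumptions; check the probing interval, tolerated failures and DNS TTL in your own profile. A reverse proxy such as AFD avoids the DNS TTL term, because clients keep connecting to the same Anycast address while Front Door changes the backend behind it.

```python
def dns_failover_estimate(probe_interval_s: int, tolerated_failures: int, dns_ttl_s: int) -> int:
    """Rough worst-case seconds before clients stop using a failed endpoint.

    Assumes (tolerated_failures + 1) consecutive failed probes mark the
    endpoint unhealthy, and clients then hold the cached DNS answer for
    up to one TTL.
    """
    detection_s = probe_interval_s * (tolerated_failures + 1)
    return detection_s + dns_ttl_s


# Example values only; read the real settings from your Traffic Manager profile.
print(dns_failover_estimate(probe_interval_s=30, tolerated_failures=3, dns_ttl_s=60))  # 180
```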

Quick Summary:

| Azure Front Door | Traffic Manager |
|---|---|
| Cross-region redirection & availability | Cross-region redirection & availability |
| SSL offload | No SSL offload |
| Uses reverse proxy | Uses DNS lookup |
| Caches static content | No caching available |
| Uses Anycast & split TCP | Does not use these techniques |
| Provides WAF feature | No WAF feature |
| 99.99% SLA | 99.99% SLA |

I hope this information helped you. In our next article, we will discuss how to create and use Azure Front Door service.

Cryptocurrency mining attack against Kubernetes clusters

Kubernetes has been phenomenal in improving developer productivity. With lightweight, portable containers, packaging and running application code is effortless. However, while developers and applications benefit from it, many organizations have knowledge and governance gaps, which can create security gaps.

Some past cases of cryptocurrency mining attacks:

Tesla case: Cyber thieves gained access to Tesla's Kubernetes administrative console, which exposed access credentials to Tesla's AWS environment. Once an attacker gains admin privileges on a Kubernetes cluster, he or she can discover all the running services, get into every pod to access processes, inspect files and tokens, and steal secrets managed by the cluster.

Jenkins case: Hackers used an exploit to install malware on Jenkins servers to mine cryptocurrency, making over $3 million to date. Although most affected systems were personal computers, it is a stern warning to enterprise security teams planning to run Jenkins in containers that business-critical applications require constant monitoring and security.

Recently, Azure Security Center detected a new crypto mining campaign that specifically targets Kubernetes environments. What distinguishes this attack from other crypto mining attacks is its scale: within only two hours, a malicious container was deployed on tens of Kubernetes clusters.

There are three ways an attacker can take advantage of the Kubernetes dashboard:

- Exposed dashboard: The cluster owner exposed the dashboard to the internet, and the attacker found it by scanning.

- Internal access: The attacker gained access to a single container in the cluster and used the cluster's internal networking to reach the dashboard.

- Legitimate credentials: The attacker browsed to the dashboard legitimately, using cloud or cluster credentials.

How could this be avoided?

Microsoft recommends the following:

- Do not expose the Kubernetes dashboard to the Internet: Exposing the dashboard to the Internet means exposing a management interface.

- Apply RBAC in the cluster: When RBAC is enabled, the dashboard's service account by default has very limited permissions that do not allow any functionality, including deploying new containers.

- Grant only necessary permissions to the service accounts: If the dashboard is used, make sure to apply only necessary permissions to the dashboard’s service account. For example, if the dashboard is used for monitoring only, grant only “get” permissions to the service account.

- Allow only trusted images: Enforce deployment of only trusted containers, from trusted registries.
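To make the "grant only necessary permissions" recommendation concrete, here is a minimal Python sketch that generates a read-only ClusterRole and ClusterRoleBinding for the dashboard's service account as a JSON manifest that `kubectl apply -f` accepts. The role name `dashboard-read-only`, the resource list, and the service account `kubernetes-dashboard` in namespace `kubernetes-dashboard` are assumptions; adjust them to match your cluster.

```python
import json


def dashboard_read_only_rbac(
    service_account: str = "kubernetes-dashboard",  # assumed service account name
    namespace: str = "kubernetes-dashboard",        # assumed namespace
    verbs: tuple[str, ...] = ("get",),
) -> dict:
    """Build a ClusterRole and ClusterRoleBinding giving the dashboard's
    service account read-only access to a few workload resources."""
    role_name = "dashboard-read-only"  # hypothetical name
    cluster_role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {"name": role_name},
        "rules": [{
            # "" is the core group (pods, services); "apps" holds deployments.
            "apiGroups": ["", "apps"],
            "resources": ["pods", "services", "deployments"],
            "verbs": list(verbs),
        }],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": role_name},
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                    "kind": "ClusterRole", "name": role_name},
        "subjects": [{"kind": "ServiceAccount",
                      "name": service_account, "namespace": namespace}],
    }
    # kubectl accepts a v1 List that wraps several objects in one file.
    return {"apiVersion": "v1", "kind": "List", "items": [cluster_role, binding]}


print(json.dumps(dashboard_read_only_rbac(), indent=2))
```

Save the output (for example, `python dashboard_rbac.py > dashboard-rbac.json`) and apply it with `kubectl apply -f dashboard-rbac.json`. A dashboard that lists resources usually also needs the "list" and "watch" verbs; passing `verbs=("get", "list", "watch")` is still read-only.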

Refer to: Azure Kubernetes Service integration with Security Center

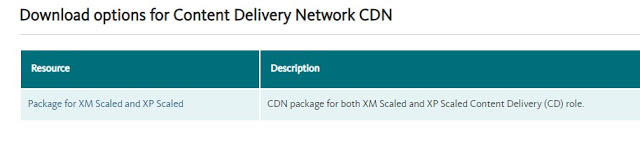

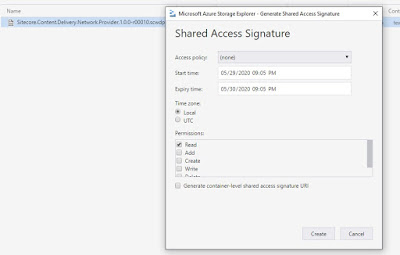

Deploy Azure CDN in an existing Sitecore environment using an ARM template

Follow the steps below to deploy Azure CDN. First, create a parameters.json file with the following content:

{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "cdWebAppName": {
      "value": "<>"
    },
    "cdnSKU": {
      "value": "<>"
    },
    "cdnMsDeployPackageUrl": {
      "value": "<>"
    }
  }
}

| Parameter | Description |
|---|---|
| cdWebAppName | Name of the CD (content delivery) instance |
| cdnSKU | CDN SKU: Premium_Verizon, Standard_Verizon, Standard_Akamai or Standard_Microsoft |
| cdnMsDeployPackageUrl | SAS URL of the CDN WDP (Web Deploy Package) |
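If you generate parameters.json from a script instead of editing it by hand, a small check on the values in the table can catch typos before deployment. Here is a minimal Python sketch, assuming the SKU list in the table is complete and that the package URL is an Azure Storage SAS URL (which carries its signature in the `sig` query parameter). `build_cdn_parameters`, the app name and the storage URL are hypothetical.

```python
import json
from urllib.parse import parse_qs, urlparse

# SKUs listed in the parameter table above.
ALLOWED_SKUS = {"Premium_Verizon", "Standard_Verizon", "Standard_Akamai", "Standard_Microsoft"}


def build_cdn_parameters(cd_web_app_name: str, cdn_sku: str, package_url: str) -> dict:
    """Build the ARM parameters document for the Sitecore CDN template (hypothetical helper)."""
    if cdn_sku not in ALLOWED_SKUS:
        raise ValueError(f"Unsupported cdnSKU: {cdn_sku}")
    parsed = urlparse(package_url)
    # An Azure Storage SAS URL carries its signature in the 'sig' query parameter.
    if parsed.scheme != "https" or "sig" not in parse_qs(parsed.query):
        raise ValueError("cdnMsDeployPackageUrl should be an HTTPS SAS URL")
    return {
        "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "cdWebAppName": {"value": cd_web_app_name},
            "cdnSKU": {"value": cdn_sku},
            "cdnMsDeployPackageUrl": {"value": package_url},
        },
    }


params = build_cdn_parameters(
    "mysite-cd",  # hypothetical CD app name
    "Standard_Microsoft",
    "https://mystorage.blob.core.windows.net/wdp/cdn.scwdp.zip?sv=2019-12-12&sig=REDACTED",  # hypothetical
)
print(json.dumps(params, indent=2))
```

Redirect the output to parameters.json (for example, `python make_params.py > parameters.json`) and pass that path as `$AzuredeployParametersFile`.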

Then run the following PowerShell commands:

Login-AzAccount
$ResourceGroupName = "<<Enter Resource group name>>"
$AzuredeployFileURL = "https://raw.githubusercontent.com/Sitecore/Sitecore-Azure-Quickstart-Templates/master/CDN%201.0.0/xp/azuredeploy.json"
$AzuredeployParametersFile = "<<path of above created parameters.json file>>"
Set-AzContext -Subscription "<>"
New-AzResourceGroupDeployment -ResourceGroupName $ResourceGroupName -TemplateUri $AzuredeployFileURL -TemplateParameterFile $AzuredeployParametersFile -Verbose

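After deployment, configure the following Sitecore settings, which control media URL generation and caching for the CDN:

- Media.AlwaysIncludeServerUrl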

- Media.MediaLinkServerUrl

- Media.AlwaysAppendRevision

- MediaResponse.Cacheability

- MediaResponse.MaxAge

For more details about CDN setup in Sitecore, refer to the following articles:

https://doc.sitecore.com/developers/92/sitecore-experience-manager/en/enabling-cdn.html

https://doc.sitecore.com/developers/93/sitecore-experience-manager/en/cdn-setup-considerations.html

Copy Data from Azure Data Lake Gen 1 to Gen 2 using Azure Data Factory

Prerequisites:

- Azure Data Lake Gen1

- Data Factory

- An Azure Storage account with Data Lake Storage Gen2 enabled

Follow the steps below to copy your data:

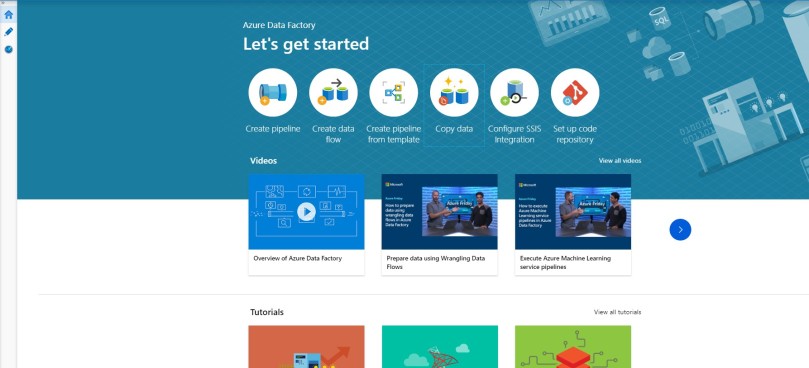

- Open the Azure portal, go to your Data Factory and click Author & Monitor.

- This opens the Data Integration Application in a new tab. Click Copy Data.

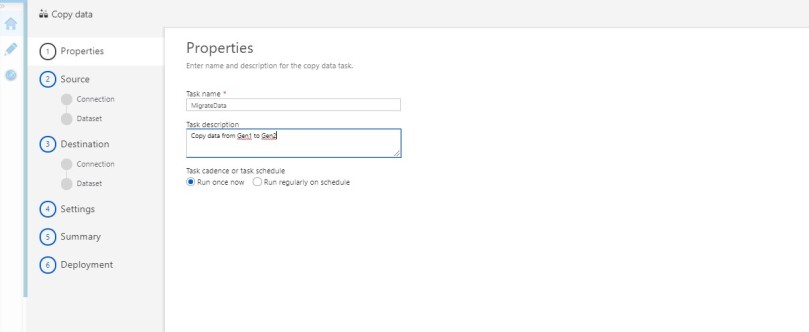

- Fill in the details on the Properties page and click Next.

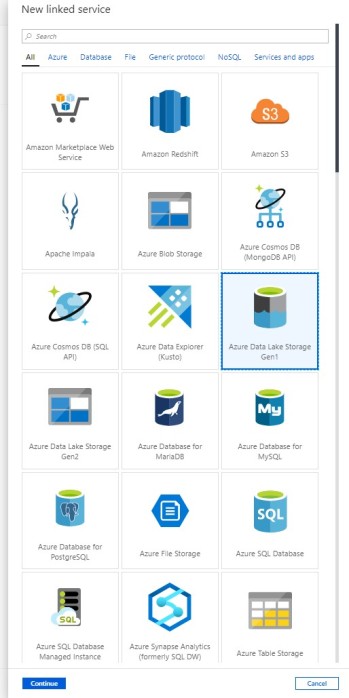

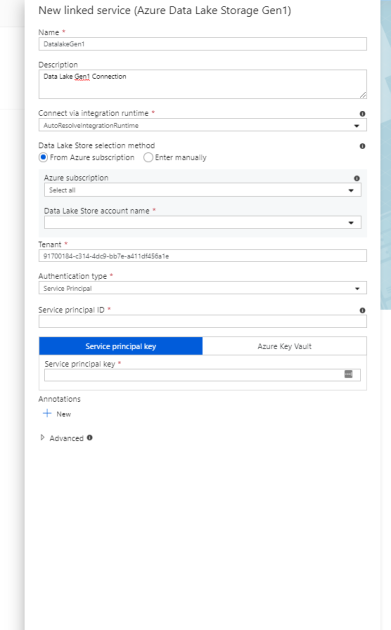

- Select the source, then Create a new connection for Data Lake Gen1

- Fill out the required parameters. You can use either Service Principal or Managed Identity for Azure resources to authenticate your Azure Data Lake Storage Gen1.

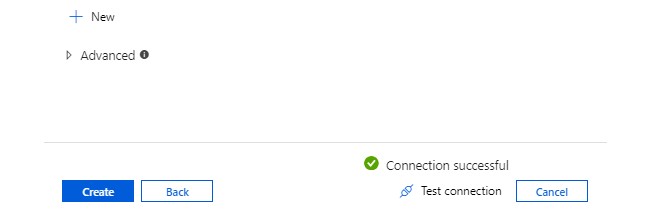

- Test the connection, then click Create

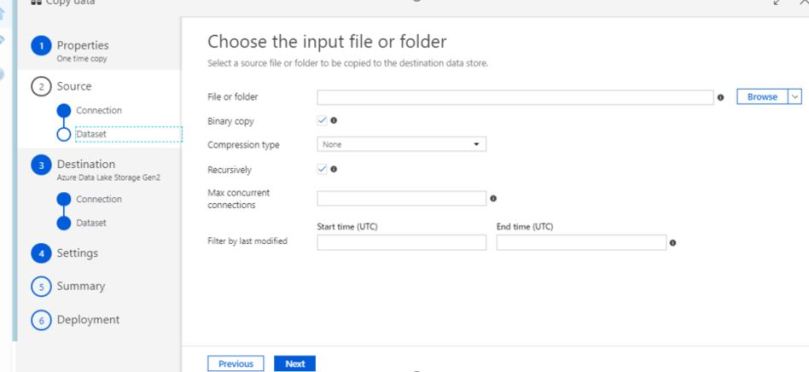

- Select your folder in the Dataset as shown below

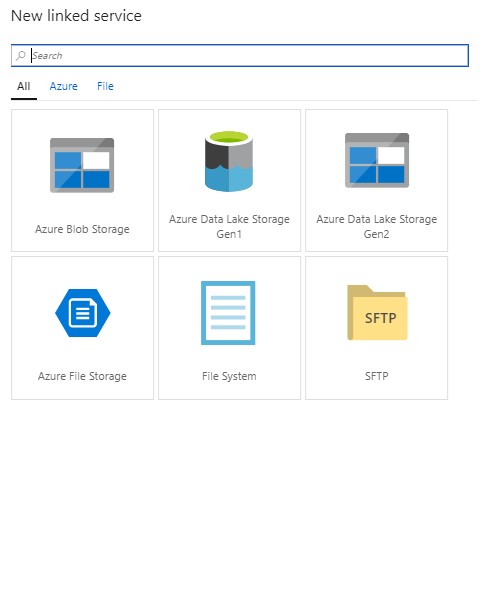

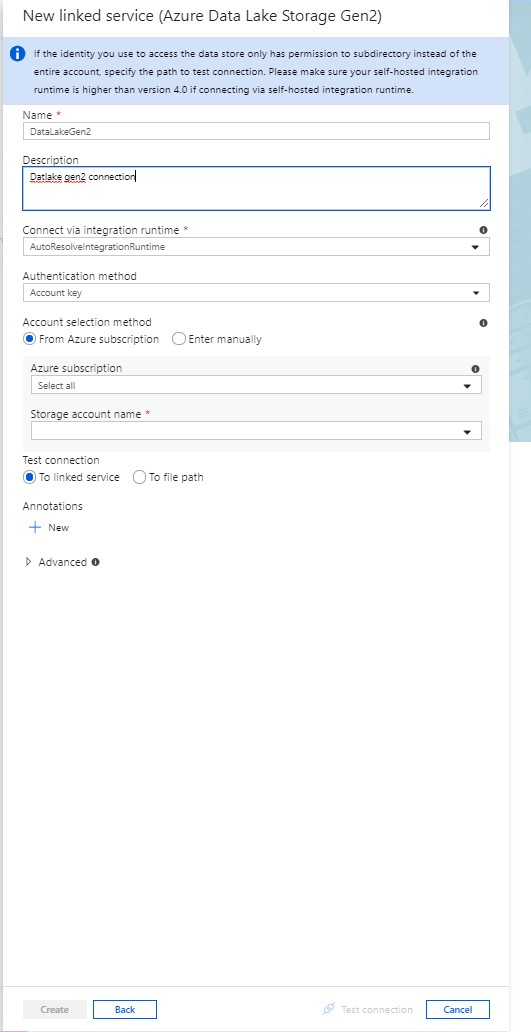

- Select the destination & create a new connection for ADLS Gen2.

- Fill out the required parameters. You can use either a Service Principal or a Managed Identity for Azure resources to authenticate to your Azure Data Lake Storage Gen2 account.

- Test the connection, then click Create

- Select the destination folder in the Dataset, then click Next.

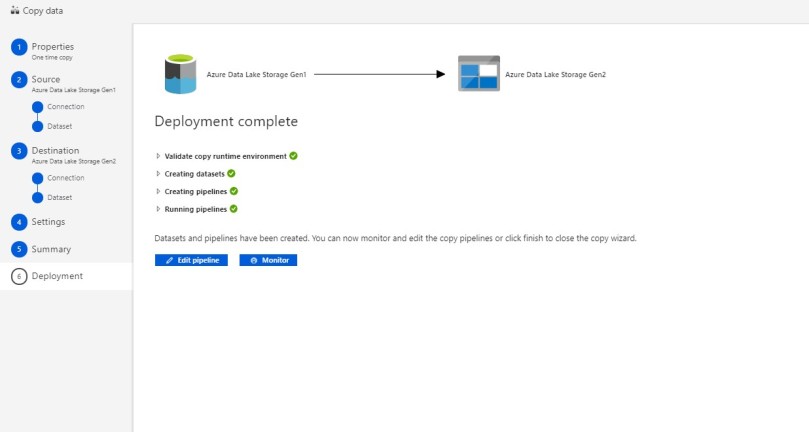

- Check and verify the summary, then click Next.

- The pipeline runs right away.

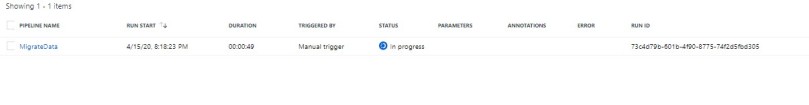

- Monitor the status:

- Navigate to the Monitor tab to see the progress.

- You can view more details by opening the activity runs and viewing their details.

As always, let us know if you have questions or comments in the comments section below!