In this tutorial, we will learn how to deploy a container image to a Kubernetes cluster using Azure Kubernetes Service (AKS). I am assuming that you have already pushed an image to your Azure Container Registry (ACR).

You may refer to the articles below for background on Docker images and Azure Container Registry.

NOTE: This tutorial uses the Azure CLI, so install Azure CLI version 2.0.29 or later. Run az --version to find the installed version.
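If you would rather script the version check than read the output by eye, something like the following sketch may help. The helper names (parse_az_version, version_ge, check_az_version) are my own and not part of the Azure CLI, and the comparison assumes a sort command that supports version sorting with -V.

```shell
#!/bin/sh
# Sketch: check that the installed Azure CLI meets the minimum version.
MIN_AZ_VERSION="2.0.29"

# Extract the azure-cli version number from `az --version` output on stdin.
# Handles both "azure-cli (2.0.29)" and "azure-cli    2.50.0" style lines.
parse_az_version() {
    sed -n 's/^azure-cli[^0-9]*\([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# version_ge A B: succeed when version A is greater than or equal to B.
# Assumes a `sort` that supports -V (version sort), e.g. GNU coreutils.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: report whether the local CLI is new enough.
check_az_version() {
    installed="$(az --version 2>/dev/null | parse_az_version)"
    if [ -z "$installed" ]; then
        echo "Could not determine the Azure CLI version." >&2
        return 1
    fi
    if version_ge "$installed" "$MIN_AZ_VERSION"; then
        echo "Azure CLI $installed is recent enough."
    else
        echo "Azure CLI $installed is older than $MIN_AZ_VERSION; please upgrade." >&2
        return 1
    fi
}

# Example: check_az_version
```

The functions only run when you call them, so sourcing the file has no side effects.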

1. Select your subscription. Replace <> with your subscription ID.

az account set --subscription <>
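To double-check that the switch took effect, you can compare the active subscription with the one you expect. This is only a sketch: subscription_is_active is a name I made up, and it assumes you pass a subscription ID rather than a subscription name.

```shell
#!/bin/sh
# Sketch: confirm that the expected subscription is the active one.

# subscription_is_active ID: succeed when the Azure CLI's current
# subscription ID equals ID. `az account show` reports the active account.
subscription_is_active() {
    expected_id="$1"
    current_id="$(az account show --query id --output tsv)" || return 1
    if [ "$current_id" = "$expected_id" ]; then
        echo "Active subscription: $current_id"
    else
        echo "Expected $expected_id but the active subscription is $current_id" >&2
        return 1
    fi
}

# Example (replace <> with your subscription ID):
# subscription_is_active <>
```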

2. If you have an existing resource group, you can skip this step; otherwise, create a new resource group. Replace <> with the group name and location.

az group create --name <> --location <>

Example:

az group create --name helloworldRG --location westeurope
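The "skip if it already exists" decision can also be scripted. The sketch below relies on az group exists, which prints true or false; ensure_resource_group is a hypothetical helper name of mine.

```shell
#!/bin/sh
# Sketch: create the resource group only when it does not exist yet.

# ensure_resource_group NAME LOCATION
ensure_resource_group() {
    rg_name="$1"
    rg_location="$2"
    # `az group exists` prints "true" or "false".
    if [ "$(az group exists --name "$rg_name")" = "true" ]; then
        echo "Resource group $rg_name already exists; skipping creation."
    else
        az group create --name "$rg_name" --location "$rg_location"
    fi
}

# Example: ensure_resource_group helloworldRG westeurope
```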

3. Create the Azure Kubernetes Service cluster.

az aks create --resource-group <> --name <> --node-count <> --enable-addons monitoring --generate-ssh-keys --node-resource-group <>

Example:

az aks create --resource-group helloworldRG --name helloworldAKS2809 --node-count 1 --enable-addons monitoring --generate-ssh-keys --node-resource-group helloworldNodeRG

When you create an AKS cluster, a second resource group (the node resource group, helloworldNodeRG in this example) is also created to hold the cluster's infrastructure resources. For more details, refer to "Why are two resource groups created with AKS?"
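If you want to see which node resource group a cluster ended up with, you can query the cluster's nodeResourceGroup property. A minimal sketch, where node_resource_group is just a wrapper name I chose:

```shell
#!/bin/sh
# Sketch: print the node resource group that belongs to an AKS cluster.

# node_resource_group RESOURCE_GROUP CLUSTER_NAME
node_resource_group() {
    az aks show --resource-group "$1" --name "$2" \
        --query nodeResourceGroup --output tsv
}

# Example: node_resource_group helloworldRG helloworldAKS2809
```

With the example cluster above, this should print the name passed to --node-resource-group.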

4. Connect to the AKS cluster.

az aks get-credentials --resource-group <> --name <>

Example:

az aks get-credentials --name helloworldAKS2809 --resource-group helloworldRG

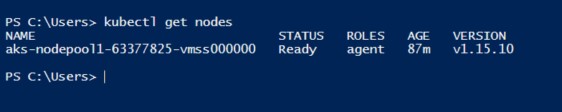

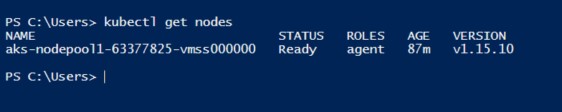

To verify your connection, run the command below to list the cluster nodes.

kubectl get nodes
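In a script you may want a number rather than a table. The sketch below counts the nodes whose STATUS column is exactly Ready; count_ready_nodes is a hypothetical helper, and it assumes the default column layout of kubectl get nodes, where STATUS is the second column.

```shell
#!/bin/sh
# Sketch: count how many nodes report a Ready status.

# Reads `kubectl get nodes --no-headers` output on stdin and prints the
# number of rows whose second column (STATUS) is exactly "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is not counted.
count_ready_nodes() {
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example: kubectl get nodes --no-headers | count_ready_nodes
# To block until every node is Ready instead:
# kubectl wait --for=condition=Ready nodes --all --timeout=120s
```

For the single-node cluster created in the example above, the count should be 1 once the node has joined.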

5. Integrate AKS with ACR. Replace <> with your cluster name, resource group, and ACR name.

az aks update -n <> -g <> --attach-acr <>

Example:

az aks update -n helloworldAKS2809 -g helloworldRG --attach-acr helloworldACR1809
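After attaching the registry, it can be worth confirming that the cluster can actually reach it. The sketch below looks up the registry's login server and passes it to az aks check-acr. I am assuming your CLI version includes the check-acr command (older releases may not), and check_acr_access is my own helper name.

```shell
#!/bin/sh
# Sketch: verify that an AKS cluster can pull from an attached ACR.

# check_acr_access RESOURCE_GROUP CLUSTER_NAME REGISTRY_NAME
check_acr_access() {
    acr_rg="$1"
    acr_cluster="$2"
    acr_registry="$3"
    # Look up the registry's login server, e.g. <name>.azurecr.io.
    login_server="$(az acr show --name "$acr_registry" \
        --query loginServer --output tsv)" || return 1
    az aks check-acr --resource-group "$acr_rg" --name "$acr_cluster" \
        --acr "$login_server"
}

# Example: check_acr_access helloworldRG helloworldAKS2809 helloworldACR1809
```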

6. Deploy the image to AKS.

Create a file named hellowordapp.yaml and copy the content below into it.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: helloworldapp
  labels:
    app: helloworldapp
spec:
  replicas: 3
  selector:
    matchLabels:
      app: helloworldapp
  template:
    metadata:
      labels:
        app: helloworldapp
    spec:
      containers:
      - name: hellositecore2705
        image: helloworldacr1809.azurecr.io/helloworldapp:latest
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: helloworldapp
spec:
  type: LoadBalancer
  selector:
    app: helloworldapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080

You can use a YAML validator to check your YAML file.
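Besides a generic YAML validator, kubectl itself can check the manifest with a client-side dry run, which prints what would be applied without sending it to the cluster. A small sketch, assuming kubectl 1.18 or later (where --dry-run=client is available); validate_manifest is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: validate a manifest with a client-side dry run before applying it.

# validate_manifest FILE
validate_manifest() {
    manifest="$1"
    if [ ! -f "$manifest" ]; then
        echo "Manifest not found: $manifest" >&2
        return 1
    fi
    # --dry-run=client prints the objects that would be sent, without sending them.
    kubectl apply --dry-run=client -f "$manifest"
}

# Example: validate_manifest hellowordapp.yaml
```

An indentation mistake or a duplicated key in the file will typically surface here as an error instead of at deploy time.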

It’s time to deploy our Docker image from ACR to AKS. Replace <> with the path to your YAML file.

kubectl apply -f <>

Example:

kubectl apply -f hellowordapp.yaml

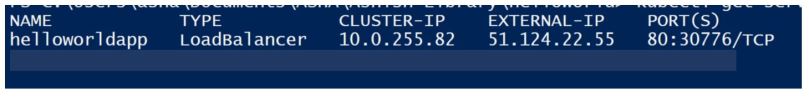

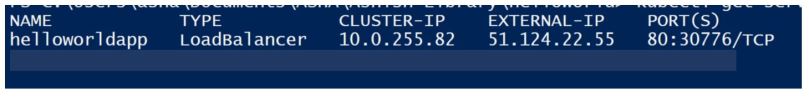
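Once the manifest is applied, you may want to confirm that all replicas came up. The following sketch compares the deployment's readyReplicas status field with the replica count you expect (3 in the YAML above); deployment_is_ready is a helper name I invented.

```shell
#!/bin/sh
# Sketch: check whether a deployment has the expected number of ready replicas.

# deployment_is_ready NAME EXPECTED_REPLICAS
deployment_is_ready() {
    dep_name="$1"
    dep_wanted="$2"
    dep_ready="$(kubectl get deployment "$dep_name" \
        -o jsonpath='{.status.readyReplicas}')" || return 1
    # readyReplicas is omitted while no replica is ready, so default to 0.
    [ "${dep_ready:-0}" -eq "$dep_wanted" ]
}

# Example: deployment_is_ready helloworldapp 3 && echo "All replicas are ready."
# To block until the rollout finishes instead:
# kubectl rollout status deployment/helloworldapp
```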

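To get the external IP, run the following command. The EXTERNAL-IP column may show <pending> for a few minutes while Azure provisions the load balancer.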

kubectl get service helloworldapp

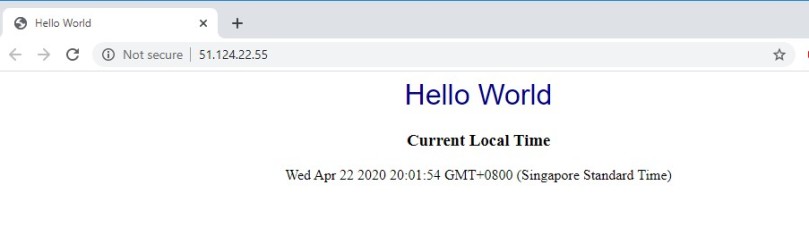

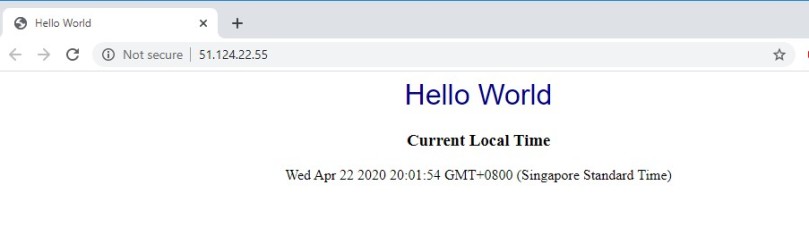
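Because the external IP can take a little while to be assigned, a script may need to poll for it. Here is a rough sketch that retries until the service reports a load balancer IP; wait_for_external_ip and its default of 30 attempts at 10-second intervals are my own choices, not anything prescribed by AKS.

```shell
#!/bin/sh
# Sketch: poll a LoadBalancer service until it has an external IP.

# wait_for_external_ip SERVICE [ATTEMPTS] [DELAY_SECONDS]
wait_for_external_ip() {
    svc_name="$1"
    svc_attempts="${2:-30}"
    svc_delay="${3:-10}"
    svc_try=0
    while [ "$svc_try" -lt "$svc_attempts" ]; do
        # The jsonpath expression yields an empty string while the IP is pending.
        svc_ip="$(kubectl get service "$svc_name" \
            -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)"
        if [ -n "$svc_ip" ]; then
            echo "$svc_ip"
            return 0
        fi
        svc_try=$((svc_try + 1))
        sleep "$svc_delay"
    done
    echo "Timed out waiting for an external IP on $svc_name" >&2
    return 1
}

# Example: wait_for_external_ip helloworldapp
```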

Open a web browser and navigate to your external IP. The application's web page should open.

I hope this information helped you. If you have any feedback, questions or suggestions for improvement please let me know in the comments section.